One of the newer features of SUSE Manager is the Virtual Host Manager (VHM). It allows the SUSE Manager server to connect to the AWS Cloud and gather information about the instances running there, which can then be displayed in the SUSE Manager Web UI.

For customers managing their own subscriptions on AWS, this data is useful for operations such as subscription matching.

To configure the VHM and connect it to an AWS account, follow these steps:

Firstly, install the required package.

SUSE Manager needs a mechanism to connect to AWS, and this is provided by the ‘virtual-host-gatherer-libcloud’ package. The package is not installed by default when you launch a SUSE Manager instance from the images published in AWS, but once the instance is registered with the SUSE Customer Center, the latest version of the package is available in the ‘SLE-Module-SUSE-Manager-Server-4.x-Updates’ channel and can be installed with ‘zypper install virtual-host-gatherer-libcloud’.

Secondly, connect SUSE Manager to AWS.

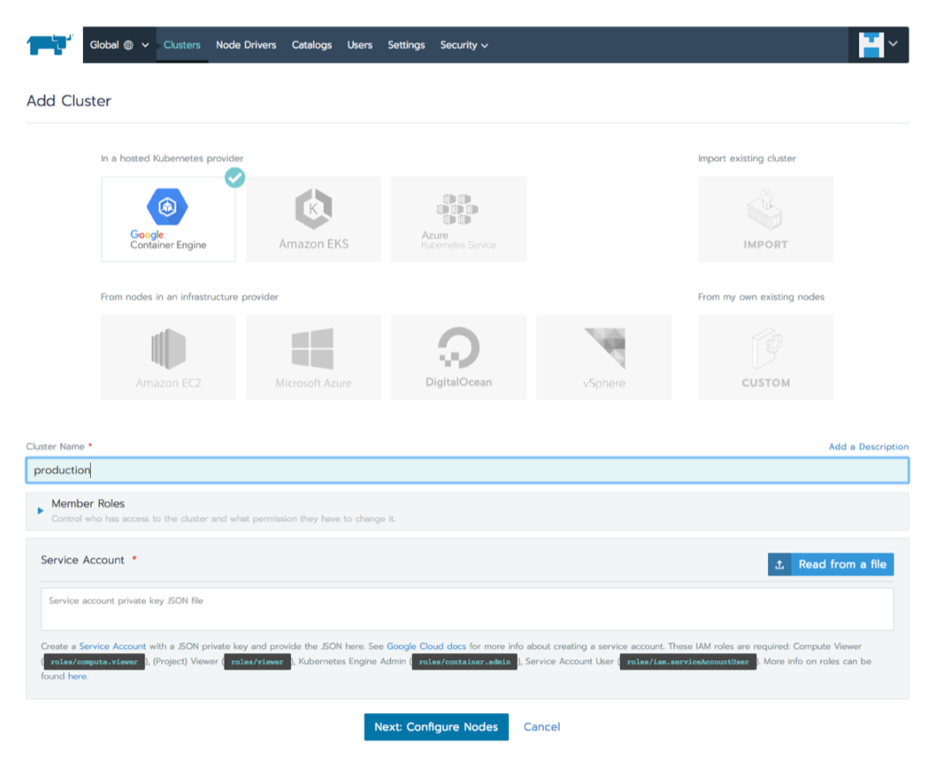

In the SUSE Manager Web UI, go to Systems > Virtual Host Manager, click Create, select AWS EC2 from the drop-down menu and fill out the required fields. This page is where you provide the AWS Access Key ID and Secret Access Key that allow SUSE Manager to gather the instance information.

Least Privilege

One question that gets asked regularly, and the reason for this article, is ‘Which AWS permissions are required for the Virtual Host Manager to function?’

Standard AWS security advice is to grant only the minimum privileges needed to perform a task, so using the access key of a user whose AWS permissions go beyond what the VHM needs is not advised.

To gather the information it needs from AWS, the VHM requires permission to describe EC2 instances and addresses. One way to grant this is to create a new IAM user dedicated to this task, then create the following policy and attach it to that user:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeAddresses",
        "ec2:DescribeInstances"
      ],
      "Resource": "*"
    }
  ]
}
```
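If you manage IAM policies from scripts rather than the console, the same policy can be produced with a few lines of Python. This is a minimal sketch using only the standard library; the helper name `build_vhm_policy` is hypothetical and is not part of SUSE Manager or any AWS SDK.

```python
import json

# The two read-only EC2 actions the VHM needs, as listed in the policy above.
VHM_ACTIONS = ["ec2:DescribeAddresses", "ec2:DescribeInstances"]


def build_vhm_policy() -> dict:
    """Hypothetical helper: return the least-privilege policy as a dict."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(VHM_ACTIONS),
                # EC2 Describe* actions do not support resource-level
                # permissions, so the resource is the wildcard "*".
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as indented JSON; nothing is sent to AWS.
    print(json.dumps(build_vhm_policy(), indent=2))
```

Running the script prints the policy as JSON, ready to paste into the JSON editor when creating the policy in the IAM console.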

You can limit permissions further by restricting access to specific regions. Additional detail on creating ‘read-only’ users in AWS can be found at:
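One way to restrict access by region is the global IAM condition key `aws:RequestedRegion`, which allows a request only when it targets one of the listed regions. The sketch below adds that condition to the policy above; the helper name `build_regional_vhm_policy` is hypothetical. It assumes the VHM only needs to query the regions you list: requests to any other region are not allowed by this policy, so check which regions your VHM actually queries before applying it.

```python
import json
from typing import Sequence


def build_regional_vhm_policy(regions: Sequence[str]) -> dict:
    """Hypothetical helper: least-privilege VHM policy limited to `regions`."""
    if not regions:
        raise ValueError("at least one region is required")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ec2:DescribeAddresses", "ec2:DescribeInstances"],
                "Resource": "*",
                # Multiple values in StringEquals are ORed: the call is
                # allowed if it targets any one of these regions.
                "Condition": {
                    "StringEquals": {"aws:RequestedRegion": list(regions)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # eu-west-2 (London) is only an example; list your own regions here.
    print(json.dumps(build_regional_vhm_policy(["eu-west-2"]), indent=2))
```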

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ExamplePolicies_EC2.html#iam-example-read-only

Monitoring Activity

For the very curious, it is also possible to monitor the operations that the Virtual Host Manager sends to AWS. The gatherer.log file in the /var/log/rhn/ directory records both the requests SUSE Manager sends to the EC2 endpoint and the responses it receives, for example:

```text
2021-03-17 11:11:54 urllib3.connectionpool - DEBUG: https://ec2.eu-west-2.amazonaws.com:443 "GET /?Action=DescribeInstances&Version=2016-11-15 HTTP/1.1" 200
2021-03-17 11:11:54 urllib3.connectionpool - DEBUG: https://ec2.eu-west-2.amazonaws.com:443 "GET /?Action=DescribeAddresses&Version=2016-11-15 HTTP/1.1" 200
```

To see this level of output in the gatherer log, the logging level must temporarily be raised to debug.
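With debug output enabled, the EC2 request lines can be picked out of gatherer.log with a small script. The following is a sketch, assuming the lines follow the urllib3 format in the excerpt above; the names `parse_gatherer_log` and `summarize` are hypothetical, and other SUSE Manager versions or log formats may need a different pattern.

```python
import re
import sys
from collections import Counter
from typing import Iterable, Iterator, NamedTuple

# Matches urllib3 debug lines such as the ones in the gatherer.log excerpt:
# timestamp, EC2 endpoint, HTTP method, the Action query parameter, status.
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"urllib3\.connectionpool - DEBUG: "
    r"(?P<endpoint>https://\S+) "
    r'"(?P<method>[A-Z]+) \S*?[?&]Action=(?P<action>\w+)\S* HTTP/[\d.]+" '
    r"(?P<status>\d{3})"
)


class ApiCall(NamedTuple):
    timestamp: str
    endpoint: str
    method: str
    action: str
    status: int


def parse_gatherer_log(lines: Iterable[str]) -> Iterator[ApiCall]:
    """Yield one ApiCall per EC2 request line; all other lines are skipped."""
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:
            yield ApiCall(
                m["timestamp"], m["endpoint"], m["method"],
                m["action"], int(m["status"]),
            )


def summarize(lines: Iterable[str]) -> Counter:
    """Count calls per (action, HTTP status) pair."""
    return Counter((c.action, c.status) for c in parse_gatherer_log(lines))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass the log path as the first argument, e.g. /var/log/rhn/gatherer.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for (action, status), n in sorted(summarize(fh).items()):
            print(f"{action}: HTTP {status} x {n}")
```

For example, running the script (saved under any name you like) with /var/log/rhn/gatherer.log as its argument prints each AWS action with its HTTP status and call count. Reading the log may require root privileges.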

Finally, a big thank you to Pablo Suárez Hernández from SUSE Engineering for bringing his SUSE Manager knowledge to this article.

Links

Check out the SUSE Manager Client Configuration Guide on the SUSE documentation site at:

https://documentation.suse.com/#suma