How does CaaSP help with Microservices (MSA) & PaaS?

To answer this question, let’s first break the ice on containerization and why there is a need for containers. Container technology was introduced back in 2006, even before the groundwork for running containers was fully in place.

What initiated containerization? Virtualization technology has been in existence for a long time and is quite mature.

It’s true that virtual machines (VMs) are good options and have been used a lot, especially in cloud development and cloud applications/infrastructure, but gaps were identified as market needs grew. Listed below are some of the gaps that led to the invention of containers and containerization:

- Failover. VMs struggle to deliver a high SLA for failover. For every failing VM, a complete virtualized machine has to be rebuilt: install the operating system, configure the network, then install and configure the application on top of it.

While some solutions today narrow this gap, they do not fully close it, especially if the SLA to be delivered for an application is as high as 99.99%.
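To make the SLA figure concrete, the short Python sketch below converts an availability percentage into the downtime it allows per year. The function name `allowed_downtime_minutes` and the 365-day year are assumptions made for illustration; they do not come from any SLA standard.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for a given availability."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for sla in (99.0, 99.9, 99.99):
        minutes = allowed_downtime_minutes(sla)
        print(f"{sla}% availability allows about {minutes:.1f} minutes of downtime per year")
```

At 99.99%, the budget is roughly 52.6 minutes per year, which leaves little room for rebuilding a complete VM after each failure.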

- Complexity of the solution. A VM is still a VM; it has a rich operating system, so installing drivers, configuring security, and installing or deploying an application on top of it is expected to be much more complex.

- You might ask, “Why does complexity matter?” Complexity always matters as it highly impacts cost, maintenance, upgrades and the service delivered by the solution.

- Cost of horizontal scaling and limitation of the vertical scaling. Another question you might ask is “Why do we need scaling? Let’s just do the planning of the resources ahead of time.” True, you can plan for all the possible resources ahead, but it will cost a lot, really a lot, with no benefit and no revenue. For example, let’s look at LinkedIn, Facebook, Twitter, or any other social platform. If all these social platforms prepared for the worst-case scenario, so that the service is never down and always serving their clients, they would need to provision resources for every human on earth accessing their networks at the exact same time. That’s a lot of money, especially if you have variable load in the equation. So the answer is scaling: adding just the required resources when a load arrives that the currently provisioned resources cannot serve.

- So now you’re probably asking, “But how is that really linked to VMs? VMs should resolve that easily.” The short answer is no. VMs are built on the concept of monolithic applications. Monolithic applications are not as granular, so when there is a need to scale horizontally we would have to add a completely new VM with the full setup of the application, even though the load falls on one single service or a couple of modules. The bottom line is that scaling horizontally using VMs is really expensive and not cost efficient. Remember, there is always a limit to vertical scaling, which will vary based on the underlying infrastructure and the operating system and runtime in use.

- Time. Time really matters, especially in cloud development and the digital era, the future of our smart world. So the question is, “Will building a new VM with platform installation, application installation, its configurations (security, integration, app configurations,…) and so on, be able to deliver the speed the market expects?” The answer is probably not, as a VM is still complex and has a large footprint. Applying a patch or an upgrade, or even restarting or rebuilding a VM, is time consuming.
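The cost argument for scaling in the list above can be sketched with a little arithmetic. The Python example below compares provisioning for the peak all day with adding capacity only when the load requires it. The hourly load figures, the per-instance capacity, and the function names are invented for illustration only.

```python
import math
from typing import List


def instances_needed(load: int, capacity_per_instance: int) -> int:
    """Smallest number of instances that can serve `load` requests per second."""
    return max(1, math.ceil(load / capacity_per_instance))


def instance_hours_static(hourly_load: List[int], capacity: int) -> int:
    """Provision for the peak hour and keep that many instances running all day."""
    return instances_needed(max(hourly_load), capacity) * len(hourly_load)


def instance_hours_scaled(hourly_load: List[int], capacity: int) -> int:
    """Run only as many instances as each hour's load requires."""
    return sum(instances_needed(load, capacity) for load in hourly_load)


# Hypothetical day: quiet for 20 hours, with a four-hour peak.
hourly_load = [100] * 20 + [1000] * 4
capacity = 100  # requests per second one instance can serve (assumed)

static = instance_hours_static(hourly_load, capacity)
scaled = instance_hours_scaled(hourly_load, capacity)
print(f"static: {static} instance-hours, scaled: {scaled} instance-hours")
```

With these made-up numbers, provisioning for the peak costs 240 instance-hours per day, while scaling with the load costs 60.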

So do containers magically fix all the former problems?

Yes, containers introduce some principles for resolving the above issues.

First, a container is essentially a lightweight standalone application that holds all its dependencies and can run by itself. How does that resolve the former issues? Let’s take a look:

- Failover. A container has a very small footprint. The main aim of containerization is to break the solution down into small chunks; let’s call those chunks microservices (MSA).

- How does that help with failover? With a container, we can build and provision a replacement for a failed service in seconds rather than minutes. And if the image used by the container is properly designed, it can take even less time.

- Containers enable an MSA design pattern. Additionally, a container doesn’t really run a full operating system of its own, since it is only supposed to run MSAs. Instead, containers share the host’s kernel and carry only a minimal base image, a kind of “micro operating system” with a very small footprint. As a result, replacing a failed container is not a massive process at all.

- Complexity of the solution. Containers drastically simplify things by running only what is needed by the application or MSA the container represents; this is a basic architecture principle of containerization. This means there is no need to run a complete operating system and no need to know or care about the underlying host (which makes the container portable). A container is everything that the app or MSA needs and nothing that it doesn’t need.

- Cost of horizontal scaling and limitation of the vertical scaling. This is really one of the benefits of containerization, as containers are built to run granular, standalone applications. If load increases on a container, we simply add more containers, delivering the same horizontal scaling in a cost-efficient manner. And because we are containerized, vertical scaling is far less necessary.

- Time. There is no real competition between VMs and containers from a time perspective: containers are much lighter and faster than VMs.
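The granularity point in the list above can be illustrated with a small Python sketch: scaling a monolith replicates every module, while scaling a containerized service replicates only the one under load. The module names and memory figures are hypothetical, and the sketch ignores the guest operating system overhead of each extra VM, which would widen the gap further.

```python
# Hypothetical memory footprint (in MB) of each module of one application.
MODULE_MEMORY_MB = {"catalog": 512, "checkout": 256, "search": 1024, "auth": 128}


def memory_to_scale_monolith(extra_copies: int) -> int:
    """Scaling a monolith replicates every module, loaded or not."""
    return extra_copies * sum(MODULE_MEMORY_MB.values())


def memory_to_scale_service(service: str, extra_copies: int) -> int:
    """Scaling a containerized service replicates only that service."""
    return extra_copies * MODULE_MEMORY_MB[service]


# Assume only "checkout" is under load and three extra copies are needed.
monolith_mb = memory_to_scale_monolith(3)
service_mb = memory_to_scale_service("checkout", 3)
print(f"monolith: {monolith_mb} MB, checkout only: {service_mb} MB")
```

In this example, three extra copies of the whole application need 5760 MB, while three extra `checkout` containers need 768 MB.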

So do containers replace VMs completely?

The answer, of course, is not entirely. Let’s first look at when containers should be used. Here are just some of the cases where you should use containerization over VMs:

- If the load is variable.

- If frequent changes are required.

- If the solution is already complex.

- If the delivered solution is built as a service (SaaS or PaaS).

- If the solution is multi-cloud or targeting multi-cloud.

How is that related to PaaS and SUSE CaaS Platform?

Let us first ask one simple question before getting into that: “Who will orchestrate the containers and make sure to scale when needed or upgrade based on the needs?” The answer to that question is Kubernetes (K8s), the market leader in container orchestration.

So now that we know the who, the next question is, “What is meant by container orchestration?”

Let’s answer the question by giving examples of container orchestration activities:

- Grouping and orchestrating containers together so that they act as one standalone, deployable unit; in K8s this is called a Pod. This really supports the design principles of MSA (loose coupling, granularity…).

- Making sure that, for each application, a minimum number of Pods is always active and available.

- Deploying a new Pod (a container or a group of containers).

- Managing and preparing external runtime dependencies and resources for Pods (such as network or storage access, load balancing, or routing between Pods for accessing backend services or for integration in general).

- Port management; in the K8s world this is handled by the service proxy (kube-proxy).

- Service discovery and routing.
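The activity of keeping a minimum number of pods running, from the list above, can be sketched as a small reconciliation loop: compare the desired state with the observed state and correct the difference. The Python below is a toy model, not Kubernetes code; the `AppState` class, the `reconcile` function, and the pod naming scheme are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AppState:
    """Toy stand-in for an application managed by an orchestrator."""
    name: str
    desired_replicas: int
    running_pods: List[str] = field(default_factory=list)
    next_id: int = 0  # counter used to give each new pod a unique name


def reconcile(app: AppState) -> List[str]:
    """Start or stop pods until the running count matches the desired count.

    Returns a log of the actions taken.
    """
    actions = []
    while len(app.running_pods) < app.desired_replicas:
        pod = f"{app.name}-{app.next_id}"
        app.next_id += 1
        app.running_pods.append(pod)
        actions.append(f"start {pod}")
    while len(app.running_pods) > app.desired_replicas:
        pod = app.running_pods.pop()
        actions.append(f"stop {pod}")
    return actions


app = AppState(name="web", desired_replicas=3)
reconcile(app)                    # starts web-0, web-1 and web-2
app.running_pods.remove("web-1")  # simulate a pod failure
actions = reconcile(app)          # starts one replacement pod
print(actions)
```

The second call to `reconcile` notices that only two pods are running and starts a replacement, which is the failover behavior described earlier, expressed as a loop over desired and actual state.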

How is K8s linked to the PaaS era? K8s is a PaaS by itself; it is a platform where tenants can be defined. Tenants are defined using namespaces, while organizations are defined using namespaces together with a security implementation (for example, RBAC).
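The tenant model described above can be sketched as a small lookup: a permission is granted only inside the namespace where a role is bound to the user. The Python below is a simplified illustration, not the Kubernetes RBAC API; the role names, users, namespaces, and the `is_allowed` helper are hypothetical.

```python
from typing import Dict, Set, Tuple

# Role name -> set of (verb, resource) pairs the role permits.
ROLES: Dict[str, Set[Tuple[str, str]]] = {
    "viewer": {("get", "pods"), ("list", "pods")},
    "developer": {("get", "pods"), ("list", "pods"), ("create", "pods"), ("delete", "pods")},
}

# (namespace, user) -> role bound to that user in that namespace.
ROLE_BINDINGS: Dict[Tuple[str, str], str] = {
    ("team-a", "alice"): "developer",
    ("team-b", "alice"): "viewer",
    ("team-b", "bob"): "developer",
}


def is_allowed(user: str, verb: str, resource: str, namespace: str) -> bool:
    """Allow an action only if the user's role in that namespace permits it."""
    role = ROLE_BINDINGS.get((namespace, user))
    if role is None:
        return False  # no binding in this namespace means no access
    return (verb, resource) in ROLES[role]


print(is_allowed("alice", "create", "pods", "team-a"))
print(is_allowed("alice", "create", "pods", "team-b"))
print(is_allowed("bob", "get", "pods", "team-a"))
```

Here the same user can create pods in one namespace but only view them in another, and a user with no binding in a namespace cannot see it at all. That isolation is what lets namespaces act as tenants.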

How is that linked to SUSE CaaS Platform? SUSE CaaS Platform uses upstream K8s as a base PaaS platform, extending it with more features such as off-the-shelf monitoring, logging, and others.

Finally, the big question: how does SUSE CaaS Platform help in the development of an MSA?

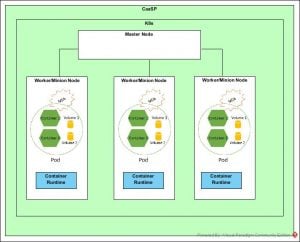

SUSE Caas Platform delivers PaaS using K8s, in conjunction with either CRI-O or Docker as an image runtime, so SUSE CaaS Platform is a PaaS which enables running granular containers acting as MSAs. Below is an architecture to demonstrate how SUSE CaaS Platform enables granular containers

Rania Mohamed is a solution architect with SUSE Global Services. For more information on how SUSE Global Services can help with your deployment of SUSE CaaS Platform, visit suse.com/services/consulting.

