SUSE Enterprise Storage delivers best CephFS benchmark on Arm

Since its introduction in 2006, Ceph has been used predominantly for volume-intensive use cases where performance is not the most critical aspect. Because the technology has many attractive capabilities, there has been a desire to extend the use of Ceph into areas such as High-Performance Computing. In HPC environments, Ceph is usually deployed as an active archive (tier-2 storage) behind another parallel file system that acts as the primary storage tier.

We have seen many significant enhancements in Ceph technology. Contributions to the open source project by SUSE and others have led to new features and considerably improved efficiency, and have made it possible for Ceph-based software defined storage solutions to provide a complete end-to-end storage platform for an increasing number of workloads.

Traditionally, HPC data storage has been very specialised to achieve the required performance. With the recent improvements in Ceph software and hardware and in network technology, we now see the feasibility of using Ceph for tier-1 HPC storage.

The IO500 10-node benchmark is an opportunity for SUSE to showcase our production-ready software defined storage solutions. In contrast to some higher-ranked systems, the SUSE Enterprise Storage Ceph-based solution that we benchmarked was production-ready, with all security and data protection mechanisms in place.

This was a team effort with Arm, Ampere, Broadcom, NVIDIA, Micron and SUSE to build a cost-effective, highly-performant system available for deployment.

The benchmark

The IO500 benchmark of IO performance combines scores for several bandwidth-intensive and metadata-intensive workloads to generate a composite score. This score is then used to rank various storage solutions. Note that the current IO500 benchmark does not account for cost or production readiness.

There are two IO500 lists, based upon the number of client nodes. The first list is for unlimited configurations and the second is for what is termed the “10-node challenge” (More IO500 details). We chose the “10-node challenge” for this benchmark attempt.

Our previous benchmark was also for the 10-node challenge and it had a score of 12.43 on a cluster we called “Tigershark”. We created a new cluster this year, called “Hammerhead”, continuing with the shark theme.

The solution

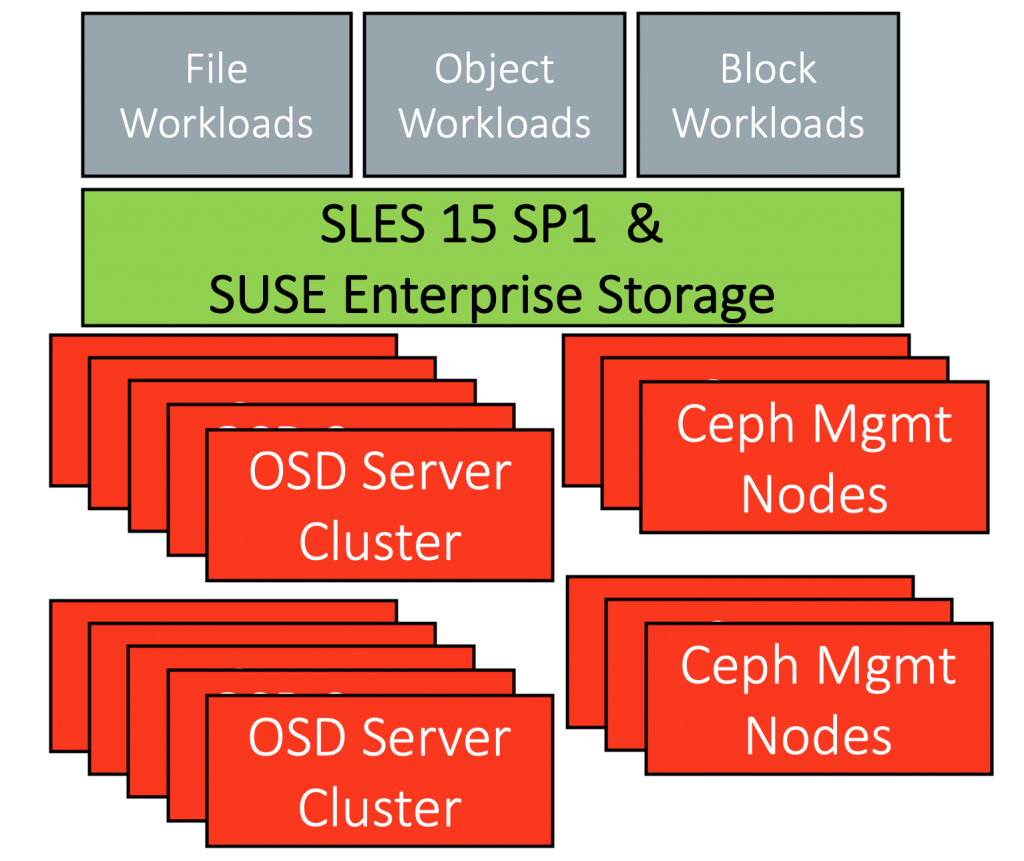

The software stack has had no major updates since the 2019 IO500 benchmark. The operating system was SLES 15 Service Pack 1 with SUSE Enterprise Storage version 6, based on the Ceph version “Nautilus”.

Our goal was to beat last year's score of 12.43 (11/19 IO500 list), which was achieved on an entirely different hardware platform, and to validate the performance of an Arm-based server. We believe that any system we benchmark should emulate a production system that a customer may deploy; therefore, all appropriate Ceph security and data protection features were enabled during the benchmark.

The hardware we used this year is substantially different even though it looks very similar. We used a Lenovo HR350A system, based on the Ampere Computing eMAG processor. The eMAG is a single-socket, 32-core Armv8 architecture processor.

The benchmark was performed on a cluster of ten nodes acting as the data and metadata servers. Each server has 128GB of RAM and four Micron 7300PRO 3.84TB NVMe SSDs. These NVMe SSDs are designed for workloads that demand high throughput and low latency while staying within a capital and power consumption budget. Broadcom provided the HBAs in each storage node.

The cluster was interconnected with Mellanox’s Ethernet Storage Fabric (ESF) networking solution. The NVIDIA technology provides a high-performance networking solution to eliminate data communication bottlenecks associated with transferring large amounts of data and is designed for easy scale-out deployments. ESF includes a ConnectX-6 100GbE NIC in each node connected by an SN3700 Spectrum 100GbE switch.

Link to the technical reference architecture

Cluster configuration

SLES Configuration

Each node was set up to disable CPU mitigations.

The network was configured to use jumbo frames with an MTU of 9000. We also increased the device buffers on the NIC and changed a number of sysctl parameters.

We used the tuned “throughput-performance” profile for all systems.

The last change was to set the IO scheduler on each of the NVMe devices to “none”.

    ip link set eth0 mtu 9000
    setpci -s 0000:01:00.0 68.w=5950
    ethtool -G eth0 rx 8192 tx 8192
    sysctl -w net.ipv4.tcp_timestamps=0
    sysctl -w net.ipv4.tcp_sack=0
    sysctl -w net.core.netdev_max_backlog=250000
    sysctl -w net.core.somaxconn=2048
    sysctl -w net.ipv4.tcp_low_latency=1
    sysctl -w net.ipv4.tcp_rmem="10240 87380 2147483647"
    sysctl -w net.ipv4.tcp_wmem="10240 87380 2147483647"
    sysctl -w net.core.rmem_max=2147483647
    sysctl -w net.core.wmem_max=2147483647
    systemctl enable tuned
    systemctl start tuned
    tuned-adm profile throughput-performance
    echo "none" >/sys/block/nvme0n1/queue/scheduler
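The final command above changes the scheduler for a single device, nvme0n1. Since each server has four NVMe SSDs, the same setting would presumably be applied to every one of them. The following is a minimal sketch of one way to do that. The function name `set_nvme_scheduler` and its optional sysfs-root argument are illustrative assumptions, not part of the original configuration.

```shell
# Hypothetical helper: write the chosen I/O scheduler to every NVMe
# namespace found under a sysfs-style root directory.
# The root is a parameter only so the loop can be dry-run against a
# scratch directory; on a real node it defaults to /sys.
set_nvme_scheduler() {
    sysfs_root="${1:-/sys}"
    scheduler="${2:-none}"
    for queue in "$sysfs_root"/block/nvme*n*/queue/scheduler; do
        # Skip the unexpanded glob pattern when no NVMe devices exist.
        [ -e "$queue" ] || continue
        echo "$scheduler" > "$queue"
    done
}
```

On a storage node this would be called as `set_nvme_scheduler` with root privileges. Values written to sysfs do not survive a reboot, so a production setup would typically persist the choice, for example with a udev rule.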

Ceph Configuration

For the SUSE Enterprise Storage (SES) configuration, we deployed four OSD processes on each NVMe device. This meant that each server was running 16 OSD processes against four physical devices.

We ran a metadata service on every Arm node in the cluster, which meant we had 12 active metadata services running.

We increased the number of PGs to 4096 for the metadata and data pools to ensure we had an even distribution of data. This is in line with the recommended number of PGs per OSD of between 50 and 100.

We also set the data protection of the pools to be 2X as we did in our last set of benchmarks. This is to make sure that any data that is written is protected.
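As a rough check of the placement group sizing, the figures above can be combined: ten storage nodes with 16 OSDs each, 4096 PGs, and 2X replication. The sketch below assumes that the 4096 figure applies to a single pool and that every PG replica counts towards an OSD's load. The function name `pg_replicas_per_osd` is hypothetical.

```shell
# PG replicas hosted per OSD = pg_num * replica_count / osd_count
pg_replicas_per_osd() {
    awk -v pgs="$1" -v repl="$2" -v osds="$3" \
        'BEGIN { printf "%.1f\n", pgs * repl / osds }'
}

nodes=10            # storage nodes (from the hardware description)
osds_per_node=16    # 4 NVMe devices x 4 OSD processes per device
osd_count=$((nodes * osds_per_node))

# One pool with 4096 PGs and 2X replication
pg_replicas_per_osd 4096 2 "$osd_count"    # prints 51.2
```

If the data and metadata pools were each created with 4096 PGs, the combined figure would be roughly 102, around the upper end of the recommended range.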

As mentioned previously, we also left authentication turned on for the cluster to emulate what we would see in a production system.

The ceph.conf configuration used:

    [global]
    fsid = 4cdde39a-bbec-4ef2-bebb-00182a976f52
    mon_initial_members = amp-mon1, amp-mon2, amp-mon3
    mon_host = 172.16.227.62, 172.16.227.63, 172.16.227.61
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network = 172.16.227.0/24
    cluster_network = 172.16.220.0/24
    ms_bind_msgr2 = false

    # enable old ceph health format in the json output. This fixes the
    # ceph_exporter. This option will only stay until the prometheus plugin takes
    # over
    mon_health_preluminous_compat = true
    mon health preluminous compat warning = false

    rbd default features = 3

    debug ms=0
    debug mds=0
    debug osd=0
    debug optracker=0
    debug auth=0
    debug asok=0
    debug bluestore=0
    debug bluefs=0
    debug bdev=0
    debug kstore=0
    debug rocksdb=0
    debug eventtrace=0
    debug default=0
    debug rados=0
    debug client=0
    debug perfcounter=0
    debug finisher=0

    [osd]
    osd_op_num_threads_per_shard = 4
    prefer_deferred_size_ssd=0

    [mds]
    mds_cache_memory_limit = 21474836480
    mds_log_max_segments = 256
    mds_bal_split_bite = 4

The Results

This benchmark effort achieved a score of 15.6. This is the best CephFS IO500 benchmark on an Arm-based platform to date. This score was an improvement of 25% over last year’s result of 12.43 on the “Tigershark” platform. The score put this configuration in 27th place in the IO500 10-node challenge, two places above our previous benchmark.

During the benchmark, we saw that the Ceph client performance metrics could easily hit peaks in excess of 16 GB/s for write performance with large file writes. Because we were using a production-ready 2X data protection policy, every client write is stored twice, which means that each of the Ceph nodes was achieving roughly 3 GB/s of I/O performance.
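The figure of roughly 3 GB/s follows from simple arithmetic: with 2X replication every byte written by a client is stored twice, and that load is spread across the storage nodes. A minimal sketch, assuming the ten storage nodes described earlier and an even distribution of writes (the function name `per_node_write_gbs` is hypothetical):

```shell
# Per-node write rate = client write rate * replica count / node count
per_node_write_gbs() {
    awk -v client="$1" -v repl="$2" -v nodes="$3" \
        'BEGIN { printf "%.1f\n", client * repl / nodes }'
}

# 16 GB/s of client writes, 2X replication, 10 storage nodes
per_node_write_gbs 16 2 10    # prints 3.2
```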

One of the most significant findings during this testing was the power consumption, or rather the lack of it. We used ipmitool to measure the power consumption as a 30-second average. The worst-case 30-second average was only 152 watts, significantly less than we saw with last year’s benchmark.
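The text does not say which ipmitool subcommand was used. One possible approach, shown here only as a sketch, is to sample `ipmitool dcmi power reading` once per second and average the instantaneous values over 30 seconds. This assumes the BMC supports DCMI power readings; the function names are hypothetical.

```shell
# Average a list of wattage samples (one number per line on stdin).
average_watts() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%.1f\n", sum / n }'
}

# Pull the wattage out of `ipmitool dcmi power reading` style output.
extract_instantaneous_watts() {
    awk '/Instantaneous power reading/ { print $4 }'
}

# Take one sample per second for the given number of seconds (default 30)
# and print the average. Requires ipmitool, sufficient privileges and a
# BMC with DCMI support.
sample_power() {
    seconds="${1:-30}"
    i=0
    while [ "$i" -lt "$seconds" ]; do
        ipmitool dcmi power reading | extract_instantaneous_watts
        sleep 1
        i=$((i + 1))
    done | average_watts
}
```

Running `sample_power 30` repeatedly during a benchmark and keeping the highest value would give a worst-case 30-second average of the kind quoted above.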

In addition to the performance improvement and power savings, we believe this cluster would cost up to 40% less than last year’s configuration.

Conclusion

The purpose-built storage solutions used in HPC come at a significant cost. With the data volume increasing exponentially for HPC and AI/ML workloads, there is considerable pressure on IT departments to optimise their expenses. This benchmark demonstrates that storage solutions based on innovative software and hardware technology can provide additional choices for companies struggling to support the amount of data needed in HPC environments.

The partnership between Ampere, Arm, Broadcom, NVIDIA, Micron and SUSE yielded a new, highly-performant, power-efficient platform: a great storage solution for any business or institution seeking a cost-effective, low-power alternative.

Partner Voices

Ampere Computing https://amperecomputing.com/ampere-claims-the-top-cephfs-storage-cluster-on-arm-in-io500s-10-node-challenge/
