CPU Isolation – Introduction – by SUSE Labs (part 1)

This blog post is the first in a technical series by the SUSE Labs team exploring Kernel CPU Isolation along with one of its core components: Full Dynticks (or Nohz Full). Here is the list of the articles in the series so far:

- CPU Isolation – Introduction

- CPU Isolation – Full dynticks internals

- CPU Isolation – Nohz_full

- CPU Isolation – Housekeeping and tradeoffs

- CPU Isolation – A practical example

- CPU Isolation – Nohz_full troubleshooting: broken TSC/clocksource

CPU isolation is a powerful set of features found behind the settings of workloads that rely on specific and often extreme latency or performance requirements. Some DPDK (Data Plane Development Kit) use cases are examples of this. However, CPU isolation’s documentation and the footnotes covering its many subtleties remain scattered at best, if not lagging behind recent developments. It’s not always easy to sort out the benefits and tradeoffs hiding behind the existing range of tunings. Our series of articles aims to shed some light on this obscure Linux kernel subsystem, which we maintain both upstream and in our SLE15 products, and to guide users through it.

Back to basics

The role of a kernel is to provide elementary services in order to use the hardware resources through a unified interface. This is the ground on which your workload walks.

An analogy can be drawn with city infrastructure: roads, energy, water supply and sewerage lie underneath to support human activity. Infrastructure is precisely what everyone expects to be transparent and reliable. We want it to work and wish we never had to hear about its very existence. Yet sometimes we have to, because every infrastructure eventually needs maintenance.

The kernel behaves in a similar fashion. It provides services through synchronous requests, using system calls, and it carries out its duties and maintains its internal state using asynchronous processing such as interrupts, timers and kernel threads. This is of course a simplified picture that leaves out many details that are not relevant for now.

Housekeeping and kernel noise

While some of this asynchronous work can have a visible impact on the user, such as page reclaim (memory swap operations), a large part of it goes unnoticed. Timers and interrupts are meant to execute in very short periods of time (usually microseconds), and many kernel threads, workqueues among them, shouldn’t run for too long either, especially since their CPU time gets balanced by the scheduler. These asynchronous pieces of kernel work are traditionally called “housekeeping” work. Some of them are bound to a specific CPU; others are unbound and can therefore execute on any CPU.

Now, while generalist user workloads won’t be burdened by kernel housekeeping, some more specialized needs may clearly stumble on the noise standing in their way. This is the case for processing that requires the entire CPU time and can’t suffer any cycle theft. DPDK is one such example, where high-bandwidth networking packets are polled directly from userspace and any tiny disturbance from the kernel can cause packet loss. This random noise is usually referred to as “jitter”, and other kinds of workloads may aim at getting closer to a jitter-free CPU to achieve their goals: e.g., virtualization hosts that want to maximize CPU resources for the guest, CPU-bound benchmarks that need stable results, specific real-time needs, etc.
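To get a rough feel for what jitter looks like from userspace, here is a minimal sketch that spins on a monotonic clock and records every gap between two consecutive reads that exceeds a threshold. The function name `measure_gaps` and the 50 microsecond default threshold are our own illustrative choices, not part of any kernel tooling. The Python interpreter adds noise of its own, so the numbers are only indicative; dedicated tools are better suited to real measurements.

```python
import time


def measure_gaps(duration_s=0.2, threshold_ns=50_000):
    """Busy-poll the monotonic clock and collect suspiciously long gaps.

    A gap much longer than one loop iteration means something else
    (an interrupt, a kernel thread, the interpreter itself...) took the
    CPU away from this loop. The threshold is an arbitrary assumption.
    """
    gaps = []
    deadline = time.perf_counter_ns() + int(duration_s * 1e9)
    prev = time.perf_counter_ns()
    while prev < deadline:
        now = time.perf_counter_ns()
        delta = now - prev
        if delta > threshold_ns:
            gaps.append(delta)
        prev = now
    return gaps


if __name__ == "__main__":
    gaps = measure_gaps(duration_s=0.5)
    worst = max(gaps, default=0)
    print(f"{len(gaps)} gaps above threshold, worst: {worst} ns")
```

Running this on a busy generalist CPU and then on a quieter one should show a difference in both the number of gaps and the worst case, which is the kind of disturbance a polling workload such as DPDK tries to avoid.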

The timer tick

Let us start exploring the world of kernel housekeeping in more detail with the timer tick. This very useful central component of the kernel has historically been a source of disturbance that is not easy to get rid of. The tick is a periodic timer interrupt executing on each CPU at a frequency ranging from 100 to 1000 Hz, though some architectures offer fancier values. It performs many jobs:

- Run expired general purpose timer callbacks

- Elapse POSIX CPU timers and run those that have expired

- Timekeeping: maintain internal clock (jiffies) and external clock (gettimeofday())

- Scheduler: maintain internal state, fairness and priorities (task preemption)

- Maintain global load average

- Maintain perf events, etc…
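To put the 100 to 1000 Hz range into perspective, the short sketch below converts a tick frequency into the interval between two ticks and into the share of CPU time spent in the tick handler. The per-tick cost of 2 microseconds is purely a hypothetical figure chosen for illustration, not a measurement, and the estimate ignores indirect costs such as cache misses after the interrupt.

```python
# Hypothetical cost of one tick, in microseconds (an assumption, not a measurement).
ASSUMED_TICK_COST_US = 2.0


def tick_period_us(hz):
    """Interval between two ticks, in microseconds, for a tick frequency in Hz."""
    return 1_000_000 / hz


def tick_overhead_fraction(hz, tick_cost_us):
    """Fraction of CPU time spent in the tick, given an assumed per-tick cost."""
    return tick_cost_us / tick_period_us(hz)


if __name__ == "__main__":
    for hz in (100, 250, 1000):
        period = tick_period_us(hz)
        overhead = tick_overhead_fraction(hz, ASSUMED_TICK_COST_US)
        print(f"{hz:>5} Hz: one tick every {period:>7.0f} us, "
              f"direct overhead {overhead:.2%}")
```

At 100 Hz a CPU is interrupted every 10 ms, and at 1000 Hz every 1 ms. The direct overhead stays small under this assumption, so the regularity of the interruptions matters more to jitter-sensitive workloads than the raw time consumed.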

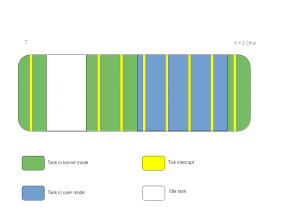

It is no surprise that such an interrupt executing 100 to 1000 times a second can be an issue for extreme workloads relying on an undisturbed, jitter-free CPU. Although fast, these interrupts still steal a few CPU cycles and can thrash the CPU cache, resulting in cache misses when the user task resumes after the interrupts. So we want to spare such workloads the tick. This problem can’t be solved easily because, unlike many other hardware IRQs, the timer tick of a CPU can’t be made affine to a different CPU. It can’t be threaded either. And fundamentally, given the nature of its work, the issue has to be sorted out locally, within the scope of the CPU. As a matter of fact, until 2.6.21 (2007), there was no mechanism at all to break the periodicity of the tick. Its behaviour looked like the following picture:

Figure 1: The periodic timer tick implementation

Here the tick fires blindly and interrupts the CPU all the time, whether it runs in kernel space, runs in user space or is idle. This layout can still be restored with CONFIG_HZ_PERIODIC. Back in 2007, the first issue that had to be solved with this implementation was power consumption. Indeed, when the CPU is idle, the tick shouldn’t be needed because there is no real work to do, and the CPU could benefit from a break from periodic interrupts in order to enter a low-power mode. This is how CONFIG_NO_HZ_IDLE (formerly known as CONFIG_NO_HZ) got introduced: it stops the periodic tick on idle entry and rearms it on idle exit. Our picture has looked like this ever since on generalist workloads:

Figure 2: The dynticks-idle timer tick implementation
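The difference between the two pictures can be observed, roughly, by sampling the per-CPU local timer interrupt counters twice and computing a rate. The sketch below assumes an x86 machine, where `/proc/interrupts` exposes a `LOC:` row ("Local timer interrupts"); other architectures name this row differently. The helper names and the embedded sample snapshots are ours and made up for illustration. The counter also includes timer interrupts other than the periodic tick, so treat it as a proxy only.

```python
import time


def parse_local_timer_counts(interrupts_text):
    """Return the per-CPU counters of the 'LOC:' row (x86 assumption)."""
    for line in interrupts_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "LOC:":
            counts = []
            for field in fields[1:]:
                if not field.isdigit():
                    break  # reached the textual description of the row
                counts.append(int(field))
            return counts
    return []


def ticks_per_second(before, after, interval_s):
    """Per-CPU interrupt rate between two snapshots of the counters."""
    return [(b - a) / interval_s for a, b in zip(before, after)]


def sample(interval_s=1.0, path="/proc/interrupts"):
    """Take two live snapshots and return the per-CPU rates (Linux only)."""
    with open(path) as f:
        before = parse_local_timer_counts(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = parse_local_timer_counts(f.read())
    return ticks_per_second(before, after, interval_s)


# Made-up snapshots taken one second apart: CPU0 is busy, CPU1 is mostly idle.
BEFORE = """\
           CPU0       CPU1
  0:         36          0   IO-APIC    2-edge      timer
LOC:     100000      50000   Local timer interrupts
"""
AFTER = """\
           CPU0       CPU1
  0:         36          0   IO-APIC    2-edge      timer
LOC:     100250      50003   Local timer interrupts
"""

if __name__ == "__main__":
    rates = ticks_per_second(parse_local_timer_counts(BEFORE),
                             parse_local_timer_counts(AFTER), 1.0)
    for cpu, rate in enumerate(rates):
        print(f"CPU{cpu}: {rate:.0f} local timer interrupts/s")
```

In the made-up snapshots, the busy CPU receives interrupts at a rate matching a 250 Hz tick while the idle CPU receives almost none, which is what the dynticks-idle behaviour predicts. Calling `sample()` on a live x86 Linux system gives the real figures.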

Now remember that with workloads targeting jitter-free CPUs, we are interested in running our actual task without the tick. And this is what we are going to explore in the 2nd part of this series.

