RBD vs. iSCSI: How best to connect your hosts to a Ceph cluster

SUSE Enterprise Storage is a versatile Ceph storage platform that enables you to get block, object and file storage all in one solution, but knowing how best to connect your virtual and bare-metal machines to a Ceph cluster can be confusing.

In this blog post, I’ll give you a quick overview to get you started with block storage using either the rbd kernel module or iSCSI.

System considerations

How you plan to use Ceph determines how best to deploy and tune it. Connecting the cluster to virtual machines to store OS disks or MariaDB databases, for example, calls for a different setup than storing large video objects. Performance will vary with the amount of memory and CPU resources you can commit and whether you use spinning disks or SSDs.

Generally speaking, you can improve Ceph’s block storage capabilities by committing more RAM and CPU and using SSDs for the journaling disks, which are where Ceph keeps track of all the data it spreads across your physical devices and OSDs.

Keep in mind, too, that the “speed” of a Ceph cluster is the result of a number of factors, including the network that connects everything. An optimized cluster – one with a good balance of RAM and CPUs to OSDs – can write data at rates that exceed 10 Gb/second. In those cases, network throughput becomes your bottleneck, not the capacity of your storage servers, though you need a sufficient number of devices and nodes to reach 10 Gb speeds.
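To get a feel for what “a sufficient number of devices” means, here is a rough back-of-the-envelope sketch. The per-device throughput figures and the replica count of 3 are illustrative assumptions of mine, not measurements, and the function names are made up for this example.

```python
import math


def link_capacity_mb_per_s(link_gbit_per_s: float) -> float:
    """Convert a link speed in gigabits/s to megabytes/s (decimal units)."""
    return link_gbit_per_s * 1000 / 8


def devices_to_saturate(link_gbit_per_s: float,
                        device_mb_per_s: float,
                        replicas: int) -> int:
    """Rough number of devices needed before the network becomes the limit.

    Assumption: every client write is stored `replicas` times, so the
    devices together must absorb replicas x the client traffic.
    """
    required = link_capacity_mb_per_s(link_gbit_per_s) * replicas
    return math.ceil(required / device_mb_per_s)


print(link_capacity_mb_per_s(10))        # 1250.0
print(devices_to_saturate(10, 150, 3))   # 25 (assumed 150 MB/s spinning disks)
print(devices_to_saturate(10, 500, 3))   # 8  (assumed 500 MB/s SSDs)
```

This ignores journaling, CPU and latency effects, so treat the result as a lower bound rather than a sizing recommendation.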

The core Ceph block technology: RBD

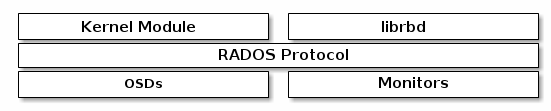

The block storage technology in Ceph is built on RADOS (Reliable Autonomic Distributed Object Store), which can scale to thousands of devices across thousands of nodes by using an algorithm, CRUSH, to calculate where the data is stored instead of looking it up, which provides the scaling you need. RADOS Block Devices, or RBDs, are thin-provisioned images that can be shared via the rbd kernel module or an iSCSI gateway.
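The idea of calculating a location rather than looking it up can be illustrated with a small sketch. To be clear, this is not Ceph's actual placement algorithm. It uses rendezvous (highest-random-weight) hashing as a simple stand-in, and the object and OSD names are hypothetical.

```python
import hashlib
from typing import List


def place(object_name: str, osds: List[str], replicas: int = 3) -> List[str]:
    """Pick `replicas` devices for an object purely by computation.

    Every client that knows the device list arrives at the same answer,
    so no central lookup table is needed. (Stand-in technique: rendezvous
    hashing, not the algorithm Ceph uses.)
    """
    def score(osd: str) -> int:
        digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # Highest scores win; the ranking depends only on the names involved.
    return sorted(osds, key=score, reverse=True)[:replicas]


osds = [f"osd.{i}" for i in range(10)]   # hypothetical device names
print(place("rbd_data.example.0001", osds))
```

A useful property of this kind of scheme is that removing a device that holds no copy of an object leaves that object's placement unchanged, so only a fraction of the data has to move when the cluster changes.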

The Champ: The rbd kernel module

Systems running Linux – regardless of whether they’re x86_64, IBM Z or aarch64 – can take advantage of the rbd kernel module for connecting to a Ceph cluster. Windows and VMware systems currently must rely on iSCSI, though work is underway to provide rbd support in Windows.

The kernel-level connection means there’s very little overhead, which provides the best performance between the cluster and nodes consuming the available storage. Benchmarking tests have shown rbd connections are capable of moving data four to 10 times faster than iSCSI in apples-to-apples tests. That is, with the same CPU, RAM and network configurations, you can expect rbd performance to exceed iSCSI.

RBD clients are “intelligent” and have the ability to talk directly to each OSD/device, whereas iSCSI must go through a number of gateways that effectively act as bottlenecks.

When mapped via the rbd kernel module, Ceph RBD images show up in the system’s device list and can be used like any other attached disk. For example, where an attached drive might be /dev/sda, a Ceph image mapped with rbd might show up as /dev/rbd0.
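As a minimal sketch of what that looks like in practice, the snippet below builds the `rbd create` and `rbd map` commands and, by default, only prints them. The pool name `rbd`, the image name `demo-image` and the 1024 MB size are placeholders I chose for illustration, and actually running the commands assumes a host with the Ceph client tools installed and access to the cluster.

```python
import shlex
import subprocess
from typing import List


def rbd_map_commands(pool: str, image: str, size_mb: int) -> List[List[str]]:
    """Build the commands that create an RBD image and map it on this host."""
    spec = f"{pool}/{image}"
    return [
        ["rbd", "create", "--size", str(size_mb), spec],  # size in megabytes
        ["rbd", "map", spec],                             # maps to /dev/rbdN
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


# Placeholder pool and image names; dry run only, nothing is executed.
run(rbd_map_commands("rbd", "demo-image", 1024))
```

Once the image is mapped, the resulting device can be formatted and mounted like any other disk.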


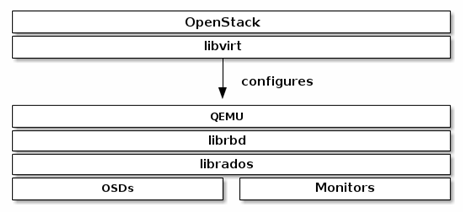

You can take advantage of this connectivity in two ways on a KVM host. The first is to attach one or more RBD images directly to the host machine and then parcel out those storage resources to your VMs and containers using libvirt and QEMU. The second is to directly attach the RBDs to any running Linux VM. Ceph doesn’t support qcow2, but you can configure raw OS device volumes or use the RBD for more generic storage.

OpenStack systems can also consume Ceph RBD images through QEMU and librbd (a userspace library rather than a kernel module), providing a good option for OpenStack images and instances. Keep in mind that this provides block storage. You can separately attach object storage for OpenStack Swift.

A versatile second: iSCSI

If you’ve used proprietary SAN or NAS devices, you’re probably familiar with iSCSI. It works well across Ethernet fabrics, but relies on a gateway protocol to interpret the flow of data between the storage cluster and the virtual or bare-metal machines that consume it. It supports high availability via multiple Ceph cluster targets, which can hand off the workload in case of an outage.

With this capability, you can take advantage of the ALUA industry standard, which was defined to enable multiple active gateways, or the MPIO round-robin policy, which allows for scaling and resiliency in the connectivity.
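To make the round-robin idea concrete, here is a toy sketch of how an initiator might rotate I/O across several gateways and skip one that has failed. It is a simplified model of the policy, not the code of any real multipath implementation, and the class and gateway names are hypothetical.

```python
from typing import Iterable


class RoundRobinPaths:
    """Rotate requests across gateway paths, skipping paths marked down."""

    def __init__(self, gateways: Iterable[str]) -> None:
        self.gateways = list(gateways)
        self.healthy = set(self.gateways)
        self._next = 0

    def mark_down(self, gateway: str) -> None:
        self.healthy.discard(gateway)

    def mark_up(self, gateway: str) -> None:
        if gateway in self.gateways:
            self.healthy.add(gateway)

    def pick(self) -> str:
        """Return the next healthy gateway in rotation order."""
        for _ in range(len(self.gateways)):
            gateway = self.gateways[self._next]
            self._next = (self._next + 1) % len(self.gateways)
            if gateway in self.healthy:
                return gateway
        raise RuntimeError("no healthy gateway paths")


paths = RoundRobinPaths(["gw1", "gw2", "gw3"])   # hypothetical gateways
print([paths.pick() for _ in range(4)])   # ['gw1', 'gw2', 'gw3', 'gw1']
paths.mark_down("gw2")                    # simulate a gateway outage
print([paths.pick() for _ in range(3)])   # ['gw3', 'gw1', 'gw3']
```

Spreading requests this way is what gives you the scaling, and skipping failed paths is what gives you the resiliency.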

The connections are made via iSCSI initiators, which are available for Linux, Windows and VMware. While fast, iSCSI still must overcome an extra layer of complexity. Performance can be quite good, but if the system you are connecting runs Linux, rbd is the better option. For Windows and VMware, though, iSCSI is currently the only option.

