Enabling Rapid Decision Making with SUSE Linux Enterprise Server and NVIDIA Virtual GPU (vGPU)

SUSE has been collaborating with NVIDIA to support customers with a combination of NVIDIA’s graphics processing units (GPUs) and virtual GPU (vGPU) software on top of our SUSE Linux Enterprise OS platform.

The NVIDIA GPU Technology Conference (GTC) represents a great opportunity to showcase some of our newer areas of cooperation. In this article, we will discuss how SUSE and NVIDIA enable rapid decision-making with SUSE Linux Enterprise Server and NVIDIA vGPU technology.

NVIDIA vGPU – TL;DR version

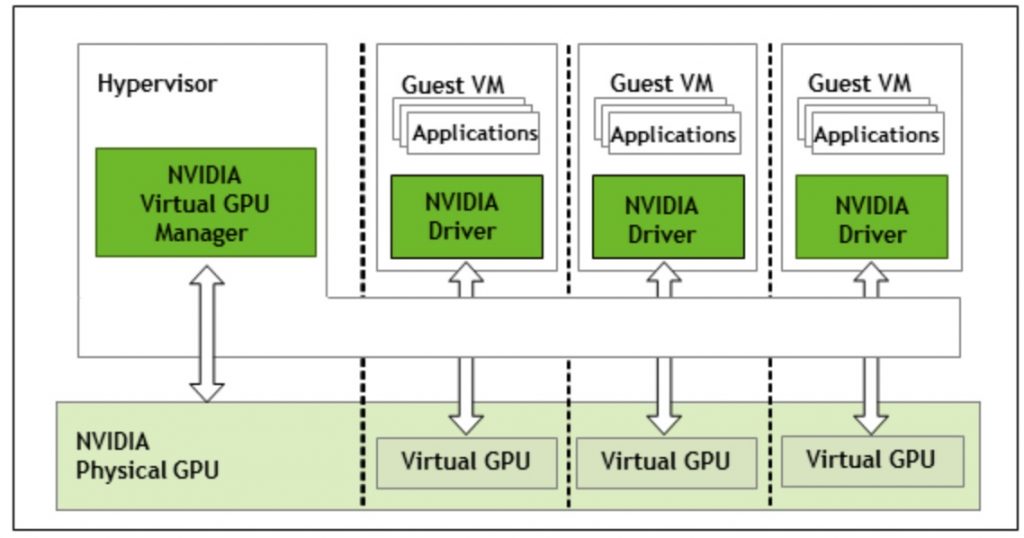

NVIDIA vGPU software creates virtual GPUs that can be shared across multiple virtual machines.

Traditionally, GPUs, particularly those focused on visualization and graphics performance, were the domain of workstations. With the right GPU in a server deployed in the data center or in the cloud, we can create powerful virtual machines with access to some of the most powerful accelerated computing devices on the market today.

NVIDIA vGPU System Architecture (Source: Virtual GPU Software User Guide v12)
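On a KVM host, vGPU instances are typically exposed through the Linux kernel's VFIO mediated device (mdev) framework. Each vGPU-capable physical GPU lists its supported vGPU types under sysfs, and writing a UUID to a type's `create` file instantiates a vGPU. The Python sketch below illustrates that flow under stated assumptions: the sysfs layout follows the kernel's mdev documentation, the helper names are hypothetical, and the exact procedure for a given GPU and driver release (some newer GPUs require enabling SR-IOV virtual functions first) should be taken from the NVIDIA Virtual GPU Software User Guide.

```python
from __future__ import annotations

import uuid
from pathlib import Path

# Default sysfs location of parent devices registered with the mdev framework.
MDEV_BUS = Path("/sys/class/mdev_bus")


def list_vgpu_types(gpu_pci_addr: str, root: Path = MDEV_BUS) -> dict[str, tuple[str, int]]:
    """Hypothetical helper: map each vGPU type ID to (name, free instances)."""
    types_dir = root / gpu_pci_addr / "mdev_supported_types"
    types: dict[str, tuple[str, int]] = {}
    for type_dir in sorted(types_dir.iterdir()):
        name = (type_dir / "name").read_text().strip()
        free = int((type_dir / "available_instances").read_text().strip())
        types[type_dir.name] = (name, free)
    return types


def create_vgpu(gpu_pci_addr: str, type_id: str, root: Path = MDEV_BUS) -> str:
    """Hypothetical helper: create one vGPU of a type and return its UUID.

    Writing to the real sysfs tree requires root privileges.
    """
    type_dir = root / gpu_pci_addr / "mdev_supported_types" / type_id
    free = int((type_dir / "available_instances").read_text().strip())
    if free < 1:
        raise RuntimeError(f"no free {type_id} instances on {gpu_pci_addr}")
    vgpu_uuid = str(uuid.uuid4())
    # Writing a UUID to the type's 'create' file asks the vendor driver
    # to instantiate a new mediated device identified by that UUID.
    (type_dir / "create").write_text(vgpu_uuid)
    return vgpu_uuid
```

For example, `create_vgpu("0000:3b:00.0", "nvidia-63")` (both arguments are hypothetical placeholders) returns a UUID that can then be attached to a guest in its libvirt domain XML as a `<hostdev mode='subsystem' type='mdev' model='vfio-pci'>` device whose `<source>` element contains an `<address uuid='...'/>` entry with that UUID.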

Demand drivers for vGPUs:

Our experience at SUSE points to three major drivers of virtual GPU adoption:

- More applications are leveraging GPUs than ever before – The visual representation and analysis of large amounts of critical data has made GPUs a business requirement both for creative and technical professionals (the original GPU audience) and for traditional knowledge-worker workloads.

- The right GPU resource per workload type – Workload requirements drive the type of GPU resource needed. For example, video editing and data science have different GPU resource requirements, and a single individual may have to perform different functions that call for different types of accelerators. vGPU gives businesses access to the right level of GPU resource for each workload.

- Transportability – A properly sized and accessible VM environment, whether on-premises or in the cloud, opens the door to new ways of working. Imagine working from your home office while still being able to access a high-end NVIDIA V100 GPU for your data analytics application. Your personal device is no longer the performance bottleneck.

In summary, access to the right GPU resources, for the right workload, at the right time is the key decision driver for vGPU adoption among SUSE Linux Enterprise users.

SUSE Linux Enterprise Server and KVM Virtualization

SUSE’s focus is, and always has been, on delivering products and solutions built on open source with worldwide, enterprise-class support. Some highlights relevant to customers looking to deploy NVIDIA GPU and vGPU on SUSE Linux Enterprise Server include:

- The KVM hypervisor is standard with SUSE Linux Enterprise Server – SUSE provides full support for running prior versions of SUSE Linux Enterprise Server, OES, NetWare and Windows Server as guests. See section 6.2 of the SUSE Linux Enterprise Server 15 SP2 Virtualization Guide for complete details.

- Feature support for fully virtualized guests includes (but is not limited to):

- Virtual network and virtual block device hotplugging (with PV drivers – VMDP).

- Virtual CPU over-commitment.

- Dynamic virtual memory size.

- VM save and restore.

- VM live migration.

- VM snapshot.

- PCI passthrough.

SUSE Linux Enterprise Server and NVIDIA vGPU – Our experience

Given SUSE’s enterprise focus, our experience centers around customers looking to deploy business/mission-critical applications on SLES-based KVM virtual machines with NVIDIA vGPU software.

Two use cases in particular reflect our experience:

- VM-based visualization applications rendering mission-critical services – For example, several distinct applications run across different VMs. An interesting requirement is the ability to “swap out” VMs quickly (suspend/resume) so that priority VMs can take precedence over others as needed.

- Cloud/VM-based AI/ML infrastructure – We created KVM-based virtual machines with NVIDIA vGPU and SUSE Linux Enterprise Server 15 SP2. These VMs can be configured to address the different stages of the data science workflow, from training to inference. At GTC, the session by SUSE’s own Liang Yan, titled “Accelerate your AI Cloud Infrastructure” (S31603), goes into more detail on our configuration, testing, and results.
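The “swap out” requirement in the first use case maps onto standard libvirt domain operations. The sketch below assumes the libvirt Python bindings: it pauses a lower-priority VM and resumes a priority VM. The function name and VM names are hypothetical, and the connection is passed in as a parameter so the logic can be exercised without a hypervisor. Whether a guest with an attached vGPU can be paused or saved depends on the NVIDIA vGPU and hypervisor versions in use, so check the vGPU release notes before relying on it.

```python
"""Give a priority VM precedence by pausing a lower-priority one (sketch).

`conn` is assumed to behave like a libvirt.virConnect object, for example
the result of libvirt.open("qemu:///system"). It is passed in rather than
created here so the logic can be tested without a hypervisor.
"""


def swap_priority(conn, low_priority_vm: str, high_priority_vm: str) -> None:
    """Hypothetical helper: pause one guest, then resume another."""
    low = conn.lookupByName(low_priority_vm)
    high = conn.lookupByName(high_priority_vm)
    # virDomain.suspend() freezes the guest's vCPUs; its memory and attached
    # devices stay allocated on the host until it is resumed.
    low.suspend()
    # The priority guest is assumed to have been paused earlier with suspend().
    high.resume()
```

Swapping back is the same call with the arguments reversed. If the paused VM's memory should also be released on the host, libvirt's `managedSave()` writes the guest state to disk instead of keeping it in RAM, subject to the same vGPU support caveat.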

Conclusion and Future:

Technical topics we’re exploring at SUSE for vGPU include GPU passthrough on the aarch64 (Arm) family of processors, as well as a vGPU plugin for SUSE Manager, our Linux lifecycle management toolset.

SUSE looks forward to continued collaboration with NVIDIA in supporting future vGPU releases. Our goal is to support the next long-term support vGPU release with SUSE Linux Enterprise Server 15 SP3 and its newer versions of KVM, libvirt and QEMU.
