Understanding Horizontal Pod Autoscaler

Autoscaling automatically increases or decreases the computing resources assigned to your application based on its resource requirements at any given time. It emerged with cloud computing, which changed the way computing resources are allocated and made fully scalable servers in the cloud possible.

What is the HPA?

HPA, or Horizontal Pod Autoscaler, is the autoscaling feature for Kubernetes pods. It is cost-effective, and automatic sizing can provide longer uptime and higher availability when traffic on production workloads is unpredictable. Unlike a fixed number of pods, automatic sizing responds to actual usage patterns, which reduces the risk of running too few or too many pods for the traffic load. For example, if traffic is usually lower at midnight, a static scaling schedule could put some pods to sleep at night; automatic scaling, on the other hand, can also handle unexpected traffic spikes.

Requirements for HPA

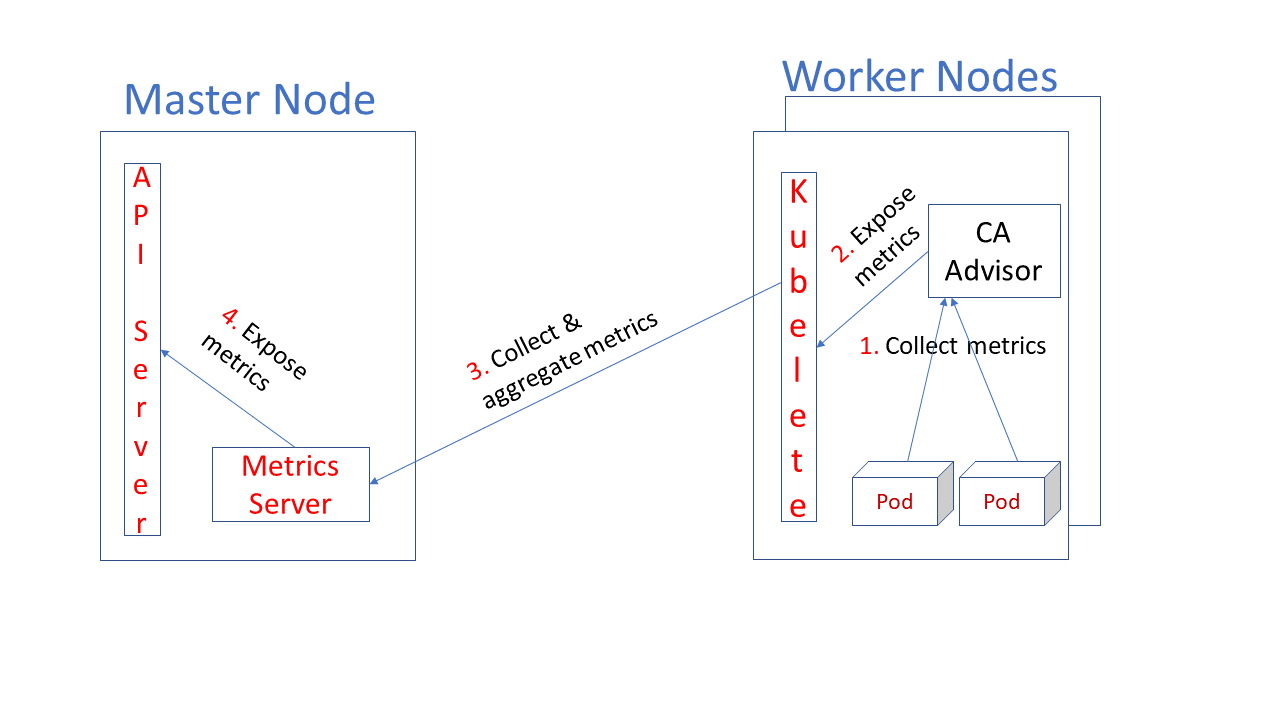

Metrics Server

Metrics Server is a scalable, efficient source of container resource metrics for the Kubernetes built-in autoscaling pipelines. It collects resource metrics from the kubelets and exposes them in the Kubernetes API server through the Metrics API for use by the Horizontal Pod Autoscaler. The Metrics API can also be accessed with kubectl top, which makes it easier to debug autoscaling pipelines.

https://github.com/kubernetes-sigs/metrics-server

Metrics Server is not meant for non-autoscaling purposes. For example, don’t use it to forward metrics to monitoring solutions, or as a source of monitoring solution metrics.

Note: Because Metrics Server is installed by default in CaaSP 4.2, the HPA feature can be used right away.

Validate the Metrics Server installation

After Metrics Server is installed, the kubectl top command becomes available on the cluster. This command shows the current resource usage of pods and nodes. If the command does not work, review the Metrics Server installation.

kubectl top node

kubectl top pod
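kubectl top prints CPU in millicores (for example 250m) and memory with binary suffixes (for example 64Mi), and the raw Metrics API can also report CPU in nanocores (for example 123456n). When scripting checks against these values, a small converter helps. The parse_cpu and parse_memory helpers below are hypothetical names; this is a sketch that covers only these common suffixes, not the full Kubernetes quantity grammar.

```python
from decimal import Decimal

# Multipliers for the quantity suffixes commonly seen in kubectl top and
# Metrics API output. This is a subset of the Kubernetes quantity format.
_CPU_SUFFIXES = {"n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3")}
_MEM_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity such as '250m' or '123456n' to cores."""
    suffix = quantity[-1]
    if suffix in _CPU_SUFFIXES:
        return Decimal(quantity[:-1]) * _CPU_SUFFIXES[suffix]
    return Decimal(quantity)  # plain cores, e.g. '2' or '0.5'


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '64Mi' to bytes."""
    for suffix, factor in _MEM_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain bytes


cpu_cores = parse_cpu("250m")         # 0.25 cores
memory_bytes = parse_memory("64Mi")   # 67108864 bytes
```

Decimal is used for CPU so that values such as 250m convert exactly instead of picking up binary floating-point rounding.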

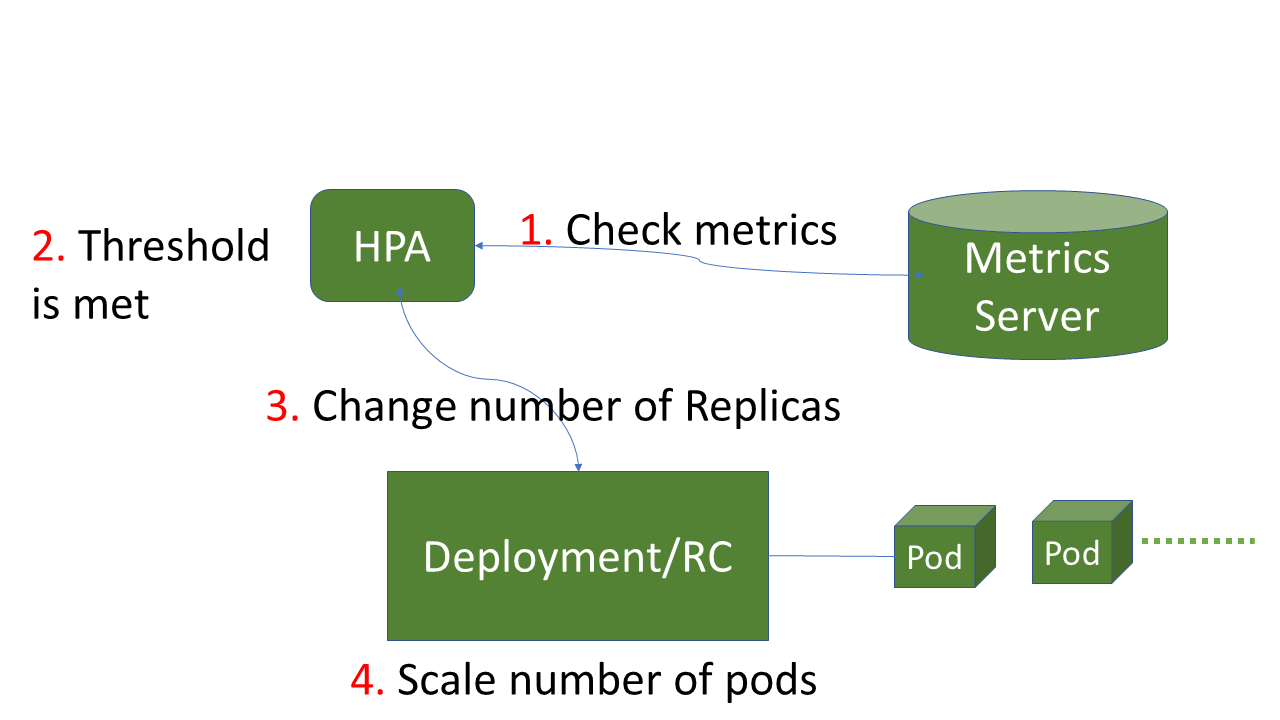

Horizontal Pod Auto-Scaler

The HPA automatically scales the number of pods in a ReplicationController, Deployment, ReplicaSet, or StatefulSet based on observed CPU or memory usage, or on custom metrics. Horizontal pod autoscaling does not apply to objects that cannot be scaled, such as DaemonSets.

The Horizontal Pod Autoscaler is implemented as a control loop whose period is set by the --horizontal-pod-autoscaler-sync-period flag of the controller manager (15 seconds by default). During each period, the controller manager queries resource utilization against the metrics specified in each HorizontalPodAutoscaler definition. It obtains metrics from the Resource Metrics API (for per-pod resource metrics) or from the Custom Metrics API (for all other metrics).
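The shape of this control loop can be pictured with a short Python sketch. run_control_loop and reconcile are hypothetical names, not part of the Kubernetes code base: the real controller runs for the lifetime of the controller manager, while this sketch stops after a fixed number of iterations and accepts an injectable sleep function so it can be exercised without waiting.

```python
import time
from typing import Callable


def run_control_loop(
    reconcile: Callable[[], None],
    sync_period: float = 15.0,
    iterations: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call reconcile() once per sync period and return how many times it ran."""
    runs = 0
    for _ in range(iterations):
        reconcile()          # e.g. fetch metrics, compute desired replicas, scale
        runs += 1
        sleep(sync_period)   # wait until the next period starts
    return runs


# Usage with a stand-in reconcile function and a no-op sleep, so it returns at once.
run_control_loop(lambda: print("reconciling"), iterations=2, sleep=lambda seconds: None)
```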

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

The HPA calculates the desired number of replicas as follows:

desiredReplicas = ceil[currentReplicas * ( currentMetricValue / desiredMetricValue )]
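The formula translates directly into a small Python function. This is a sketch, not the controller's code: desired_replicas is a hypothetical name, and besides the formula it applies the minReplicas/maxReplicas bounds of the HPA spec and the tolerance within which the controller does not scale (the --horizontal-pod-autoscaler-tolerance flag, default 0.1). It ignores other refinements of the real controller, such as the handling of missing metrics and not-yet-ready pods and the default five-minute scale-down stabilization window.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    desired_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Apply the HPA scaling formula, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / desired_metric
    # Skip scaling when the ratio is within the tolerance of 1.0.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 80, 50))   # 80% against a 50% target: ceil(2 * 1.6) = 4
```

With 4 replicas at 20% CPU and a 50% target, the ratio is 0.4 and the result is ceil(1.6) = 2; with 8 replicas at 100%, the formula asks for 16 but the result is capped at maxReplicas (10).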

The HPA Manifest:

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50

In this example, the HPA adjusts the number of replicas of the php-apache deployment to keep the average CPU utilization of all its running pods, measured as a percentage of the pods' CPU requests, at about 50%: it adds replicas when the average rises above 50% and removes replicas when it falls below.
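averageUtilization is measured relative to the CPU the pods request (resources.requests.cpu), not to the node's capacity. The sketch below, with the hypothetical helper average_utilization, mirrors how the controller aggregates it: the total CPU usage of all pods divided by their total CPU requests. The pod figures in the usage line are invented for illustration.

```python
from typing import Sequence


def average_utilization(usage_millicores: Sequence[int], request_millicores: Sequence[int]) -> float:
    """Return the average CPU utilization of a set of pods as a percentage of their requests."""
    if len(usage_millicores) != len(request_millicores):
        raise ValueError("expected one CPU request per pod")
    total_request = sum(request_millicores)
    if total_request == 0:
        # Without CPU requests there is nothing to compare usage against.
        raise ValueError("pods have no CPU requests")
    return 100.0 * sum(usage_millicores) / total_request


# Three hypothetical pods, each requesting 200m of CPU.
print(average_utilization([150, 90, 60], [200, 200, 200]))  # 50.0
```

Here the pods average exactly 50%, so the php-apache HPA would leave the replica count unchanged; the same pods using 150m each would average 75% and trigger a scale-up.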

When using the HPA, remove the replicas field from the Deployment or ReplicaSet manifest, because the number of replicas is managed by the HPA controller.
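If spec.replicas stays in the Deployment manifest, each kubectl apply of that manifest resets the replica count to the fixed value and works against the HPA. The sketch below uses a hypothetical helper, strip_replicas, to remove the field from a manifest that has already been loaded into a Python dict (for example with a YAML parser); the deployment shown is illustrative.

```python
import copy


def strip_replicas(manifest: dict) -> dict:
    """Return a copy of a workload manifest without spec.replicas, leaving the input untouched."""
    cleaned = copy.deepcopy(manifest)
    spec = cleaned.get("spec")
    if isinstance(spec, dict):
        spec.pop("replicas", None)  # the HPA controller owns this value
    return cleaned


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "php-apache"},
    "spec": {"replicas": 3, "selector": {"matchLabels": {"run": "php-apache"}}},
}
print(strip_replicas(deployment)["spec"])
```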

To create the same autoscaler with kubectl:

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

To verify the HPA:

kubectl get hpa php-apache

To describe the HPA:

kubectl describe hpa php-apache

Understanding the complete flow

1. Metrics Server collects resource metrics for the current pods from the kubelets and serves them through the Kubernetes API (the Metrics API) when requested.

2. The HPA controller checks the metrics every 15 seconds by default and, if the observed values differ from the target defined in the HPA, increases or decreases the number of pods.

3. On scale-up, the Kubernetes scheduler places the new pods on nodes that have available resources.

4. On scale-down, the HPA decreases the number of replicas and the excess pods are terminated.

Troubleshooting

If the kubectl get hpa command shows an unknown status (for example, <unknown> in the TARGETS column), verify Metrics Server, because the HPA controller cannot get the metrics.

If new pods remain Pending and kubectl describe pod shows a FailedScheduling event because no node has enough resources, verify the Cluster Autoscaler.
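The first check can be scripted. The sketch below, with the hypothetical helpers hpas_without_metrics and check_cluster, flags HPAs whose row in the kubectl get hpa output contains <unknown>; it assumes kubectl is on the PATH and configured for the cluster, and the sample table in the usage line is illustrative. Besides a missing Metrics Server, an <unknown> CPU target can also appear when the target pods have no CPU request.

```python
import subprocess
from typing import List


def hpas_without_metrics(table: str) -> List[str]:
    """Return the names of HPAs whose row in `kubectl get hpa` output contains <unknown>."""
    names = []
    for line in table.strip().splitlines()[1:]:  # skip the header row
        if "<unknown>" in line:
            names.append(line.split()[0])  # NAME is the first column
    return names


def check_cluster(namespace: str = "default") -> List[str]:
    """Run kubectl get hpa in a namespace and report HPAs that have no metrics."""
    result = subprocess.run(
        ["kubectl", "get", "hpa", "-n", namespace],
        capture_output=True, text=True, check=True,
    )
    return hpas_without_metrics(result.stdout)


sample = """NAME         REFERENCE               TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   <unknown>/50%   1         10        1          2m
"""
print(hpas_without_metrics(sample))
```

Matching on the whole row rather than a fixed column index keeps the check working even if a kubectl version formats the TARGETS column with embedded spaces.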
