Accelerating Secure, Data‑Driven Telco and Cloud Workloads with SUSE on Intel® Xeon® Platforms

Guest blog post authored by:

Tony Dempsey, Director, Open-Source Partnerships, Intel Corporation

As telco and cloud infrastructures evolve toward AI‑native, cloud‑native architectures, platforms must deliver security, performance, and flexibility at scale.

Modern workloads, from 5G Core and RAN functions to confidential computing and real‑time analytics, demand more than raw CPU performance. They require hardware‑assisted security, intelligent acceleration, and a tightly integrated software stack.

SUSE Telco Cloud, running on Intel® Xeon® platforms, brings these capabilities together. By combining SUSE’s enterprise-grade Linux and Kubernetes expertise with Intel’s built‑in accelerators and instruction sets, service providers and enterprises can deploy secure, high‑performance, and future‑ready infrastructures for telco, cloud, and edge environments.

This post explores how key Intel technologies, namely Intel Software Guard Extensions (SGX), Intel Data Streaming Accelerator (DSA 2.0), Intel In‑Memory Analytics Accelerator (IAA 2.0), Compute Express Link (CXL 2.0), and Intel Advanced Vector Extensions 2 (AVX2), are supported by SUSE Linux Micro (SL Micro), the core OS component of the SUSE Telco Cloud stack, and by SUSE Linux Enterprise Server (SLES). It also shows how these technologies translate into real customer value.

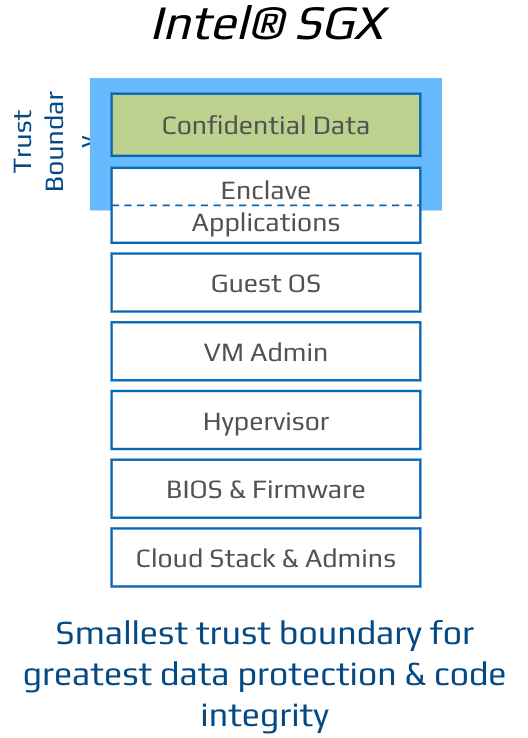

Intel® Software Guard Extensions (SGX): Hardware‑Based Confidential Computing

Intel® Software Guard Extensions (SGX) is a hardware‑based security technology that creates isolated, encrypted memory regions called enclaves. Code and data inside an enclave stay protected even if the operating system or hypervisor is compromised, which supports a zero‑trust execution model in which privileged system software does not have to be trusted.

For telco and cloud environments that handle highly sensitive assets such as subscriber data and cryptographic keys, or that run regulated workloads, SGX provides strong hardware‑level isolation and protection.

Figure 1: Intel® SGX App Isolation

Customer Benefits

- Zero‑trust data protection: Sensitive data remains encrypted and isolated, even in the event of OS or hypervisor compromise.

- Regulatory compliance: Supports strict data‑handling requirements across financial services, healthcare, and government sectors.

- Enhanced customer trust: Proven hardware‑enforced isolation increases confidence in platform security.

Supported Use Cases

- Secure telco workloads, including 5G Core and DU/CU functions

- Cloud confidential computing

- Financial and regulatory data protection

SUSE Support

Support for Intel SGX was introduced in SL Micro 5.3 and SLES 15 SP4, enabled through a rebase to a more recent mainline kernel, bringing enterprise‑grade support for confidential computing workloads.
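One way to confirm that a SUSE host is ready for SGX workloads is to check two things: the CPU advertises the `sgx` feature flag, and the in‑kernel SGX driver has created its enclave device. The Python sketch below assumes the standard `/proc/cpuinfo` layout and the `/dev/sgx_enclave` device node created by the upstream Linux SGX driver; the helper names are hypothetical, and a missing flag can also mean SGX is disabled in firmware or not exposed to a VM.

```python
from pathlib import Path


def parse_cpu_flags(cpuinfo_text):
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Match the plain 'flags' key only, not e.g. 'vmx flags'.
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def sgx_ready(flags, enclave_device_present):
    """SGX is usable only if the CPU reports it AND the kernel exposes the enclave device."""
    return "sgx" in flags and enclave_device_present


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    # Assumption: the upstream SGX driver creates /dev/sgx_enclave when it loads.
    present = Path("/dev/sgx_enclave").exists()
    print("SGX ready:", sgx_ready(parse_cpu_flags(text), present))
```

Keeping the parsing separate from the file access makes the check easy to reuse, for example in a node‑readiness script that runs before scheduling confidential workloads.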

Intel® Data Streaming Accelerator (DSA 2.0): High‑Performance Data Movement

Intel® Data Streaming Accelerator (DSA 2.0) is a dedicated hardware engine designed to offload high‑volume data movement and transformation tasks from the CPU. By accelerating operations such as memcpy, memcmp, memset, CRC, and data validation, DSA improves throughput while reducing latency and CPU overhead.

In data‑intensive telco and cloud pipelines, DSA helps eliminate bottlenecks caused by memory‑to‑memory operations.

Figure 2: Abstracted Internal Block Diagram of Intel® DSA

Customer Benefits

- Reduced system bottlenecks: Offloads data movement tasks, improving overall system efficiency.

- Predictable low latency: Ensures smoother pipeline execution for networking, storage, and analytics workloads.

- Optimized CPU utilization: Frees CPU cores for revenue‑generating applications instead of housekeeping tasks.

Supported Use Cases

- Packet processing in telco and cloud infrastructure

- Storage acceleration for block and file systems

- Data analytics pipelines

SUSE Support

Intel DSA 2.0 is enabled in SL Micro 6.0 and SLES 15 SP6, leveraging the kernel 6.4 baseline for full accelerator support.
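On Linux, DSA is driven by the `idxd` kernel driver, which exposes accelerator instances through sysfs. The Python sketch below lists them, assuming the upstream driver's naming under `/sys/bus/dsa/devices` (for example `dsa0` for a DSA device, `iax1` for an IAA device on the same bus, and `wq0.0` for a work queue). The function names are hypothetical, and work queues still have to be configured, for example with the `accel-config` utility, before applications can submit work to them.

```python
from pathlib import Path

# Assumption: the idxd driver registers devices on the "dsa" bus with names
# such as dsa0, iax1, wq0.0, engine0.0 and group0.0.
IDXD_BUS = Path("/sys/bus/dsa/devices")


def classify_idxd_entries(names):
    """Group idxd sysfs entry names into DSA devices, IAA devices and work queues."""
    groups = {"dsa": [], "iax": [], "wq": []}
    for name in sorted(names):
        for prefix in groups:
            # Require a digit right after the prefix so unrelated names are skipped.
            if name.startswith(prefix) and name[len(prefix):][:1].isdigit():
                groups[prefix].append(name)
                break
    return groups


if __name__ == "__main__":
    names = [p.name for p in IDXD_BUS.iterdir()] if IDXD_BUS.is_dir() else []
    found = classify_idxd_entries(names)
    print(f"DSA devices: {found['dsa'] or 'none'}")
    print(f"Work queues: {found['wq'] or 'none'}")
```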

Intel® In‑Memory Analytics Accelerator (IAA 2.0): Faster Analytics and Compression

Intel® In‑Memory Analytics Accelerator (IAA 2.0) is a built‑in hardware accelerator that speeds up compression, decompression, and database analytics operations. By offloading compute‑intensive functions such as scanning, filtering, and data transformation from the CPU, IAA delivers higher throughput and lower latency for analytics‑driven workloads.

This capability is particularly valuable for AI, fraud detection, and large‑scale log or telemetry processing.
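To illustrate the kind of work IAA takes off the CPU, the sketch below is a plain‑Python software reference for a range scan: it marks which values in a column fall inside a range as a bit vector, then selects the matching values. It is not the IAA programming interface; applications typically reach IAA through libraries such as the Intel Query Processing Library (QPL), and the function names and sample data here are hypothetical.

```python
def scan_range(values, low, high):
    """Return a bit vector (as an int) with bit i set when low <= values[i] <= high.

    Software reference only: it mirrors the kind of filter an analytics
    accelerator evaluates in hardware, not any accelerator API.
    """
    mask = 0
    for i, v in enumerate(values):
        if low <= v <= high:
            mask |= 1 << i
    return mask


def selected(values, mask):
    """Extract the values whose bit is set in mask (a 'select' step)."""
    return [v for i, v in enumerate(values) if (mask >> i) & 1]


if __name__ == "__main__":
    latency_ms = [12, 250, 31, 480, 7, 199]
    mask = scan_range(latency_ms, 100, 500)
    print(f"{mask:06b}")  # prints 101010 (bit 0 is the rightmost digit)
    print(selected(latency_ms, mask))  # prints [250, 480, 199]
```

On a CPU this loop touches every value; offloading the scan lets the cores work only with the much smaller set of matching rows.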

Figure 3: Intel® IAA Plugin for RocksDB Storage Engine – RocksDB Architecture

Customer Benefits

- Faster real‑time analytics: Accelerates compression, decompression, and pattern matching in databases.

- Lower storage and memory costs: Efficient compression reduces memory footprint and infrastructure overhead.

- Accelerated insight‑to‑action: Enables faster query responses for analytics, AI inference, and operational intelligence.

Supported Use Cases

- Machine learning inference in cloud and edge environments

- Database acceleration for analytics workloads

- HPC workloads requiring vectorized data processing

SUSE Support

Intel IAA was introduced as a technology preview in SLES 15 SP7 and is now fully supported in SL Micro 6.2 and SLES 16.0, providing production‑ready analytics acceleration.

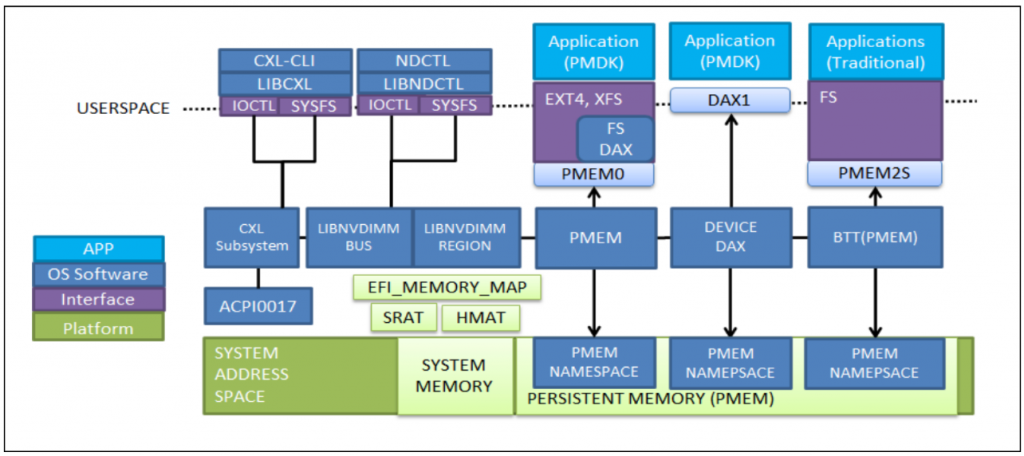

Compute Express Link (CXL 2.0): Memory and Accelerator Expansion

Compute Express Link (CXL) 2.0 is a high‑speed, low‑latency interconnect standard that enables memory expansion and memory pooling across servers. CXL allows CPUs, accelerators, and memory devices to share resources coherently, improving utilization and reducing performance bottlenecks.

For telco, cloud, and AI workloads dealing with large datasets, CXL introduces a new level of architectural flexibility.
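On Linux, CXL‑attached memory expanders are often onlined as NUMA nodes that have memory but no CPUs, so applications can place data on them with standard NUMA tooling. The Python sketch below lists such memory‑only nodes from the kernel's `/sys/devices/system/node/has_memory` and `has_cpu` files. It is a heuristic under that assumption: a memory‑only node is not necessarily CXL (it could also be, for example, persistent memory), and the helper names are hypothetical.

```python
from pathlib import Path

NODE_DIR = Path("/sys/devices/system/node")


def parse_node_list(text):
    """Parse a kernel node list such as '0-1,3' into a set of node IDs."""
    nodes = set()
    for part in text.strip().split(","):
        if not part:
            continue
        start, _, end = part.partition("-")
        nodes.update(range(int(start), int(end or start) + 1))
    return nodes


def memory_only_nodes(has_memory, has_cpu):
    """Return NUMA nodes that have memory but no CPUs (candidate CXL memory)."""
    return sorted(parse_node_list(has_memory) - parse_node_list(has_cpu))


def read_node_file(name):
    path = NODE_DIR / name
    return path.read_text() if path.exists() else ""


if __name__ == "__main__":
    print("Memory-only NUMA nodes:",
          memory_only_nodes(read_node_file("has_memory"), read_node_file("has_cpu")))
```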

Figure 4: Basic Linux CXL PMEM Architecture

Customer Benefits

- Greater system flexibility: Add memory or accelerators on demand without full system upgrades.

- Higher workload density: Expand memory pools to support more VMs, containers, or analytics workloads per server.

- Improved performance consistency: Reduce memory bottlenecks for real‑time and data‑intensive applications.

Supported Use Cases

- Memory pooling for cloud workloads

- Accelerator offload using GPUs or FPGAs

- Telco DU and CU workload optimization

SUSE Support

CXL 2.0 is enabled in SL Micro 6.2 and SLES 15 SP7, with the required functionality backported from kernel 6.10.

Intel® Advanced Vector Extensions 2 (AVX2): Vectorized Performance at Scale

Intel® Advanced Vector Extensions 2 (AVX2) is a CPU instruction set extension that accelerates math‑intensive and data‑parallel workloads using 256‑bit vector processing. Because a single instruction operates on several data elements at once, AVX2 delivers higher throughput and improved energy efficiency.
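A short worked example makes the 256‑bit figure concrete: one AVX2 register holds 32 8‑bit integers, 8 32‑bit values, or 4 64‑bit values, so an element‑wise operation over 32‑bit data ideally needs one‑eighth as many instructions as a scalar loop. The Python sketch below only does that arithmetic; the function names are illustrative, and real speedups also depend on memory bandwidth, data alignment, and how well the compiler vectorizes the code.

```python
AVX2_REGISTER_BITS = 256


def lanes(element_bits):
    """Number of elements of the given width that fit in one 256-bit AVX2 register."""
    return AVX2_REGISTER_BITS // element_bits


def vector_instructions_needed(n_elements, element_bits):
    """Ideal count of 256-bit operations for n elements (ceiling division, no overheads)."""
    width = lanes(element_bits)
    return (n_elements + width - 1) // width


if __name__ == "__main__":
    for name, bits in [("int8", 8), ("int32/float32", 32), ("float64", 64)]:
        print(f"{name}: {lanes(bits)} lanes")
    # Adding two arrays of 1,000 float32 values: 125 vector adds instead of 1,000 scalar adds.
    print(vector_instructions_needed(1000, 32))  # prints 125
```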

AVX2 is foundational for many modern workloads, including AI inference, RAN signal processing, analytics, and media encoding.

Customer Benefits

- Accelerated data and signal processing: Improves performance for AI inference, RAN, and media workloads.

- Higher application efficiency: Enables more work per CPU cycle, reducing processing time.

- Improved energy efficiency: Faster execution lowers total CPU cycles and power consumption.

Supported Use Cases

- HPC and AI workloads

- Video encoding and scientific simulations

- Database compression and analytics

SUSE Support

Intel AVX2 was introduced with the Intel Haswell microarchitecture, and SUSE support has been available since SLES 11 SP3 and SL Micro 5.0, making it a long‑standing and mature capability within SUSE Linux Enterprise.

Building the Foundation for AI‑Native Telco and Cloud

By tightly integrating Intel’s hardware security features, accelerators, and instruction sets with SUSE Telco Cloud, organizations gain a platform that is secure by design, performance‑optimized, and ready for AI‑native workloads.

From confidential computing with SGX to data movement and analytics acceleration with DSA and IAA, memory expansion with CXL, and vectorized performance with AVX2, SUSE and Intel together enable service providers and enterprises to:

- Protect sensitive data with hardware‑enforced security

- Maximize infrastructure efficiency and CPU utilization

- Scale analytics, AI, and telco workloads with confidence

Whether you are deploying 5G in dense urban zones, powering edge AI workloads, or modernizing legacy RAN, this is a foundation that keeps pace with your ambitions. It is not just infrastructure; it is the enabler for what comes next: smarter networks, faster deployment, and future‑proof operations.

Ready to turn your telco and cloud vision into reality? Contact your Intel and SUSE account teams to schedule a sizing workshop.

Guest author:

Tony Dempsey is an experienced telecom professional who spent 18 years in the telco industry before joining Intel, working with network operators in Europe, the Caribbean, and Central and South America. During that time, Tony held a variety of managerial, development, and director‑level roles spanning product development and project and program management, delivering a wide range of network operator infrastructure and data services. Tony established Intel’s multi‑award‑winning Intel Network Builders ecosystem program of more than 350 partner companies and today leads Intel’s OSV enablement activities within Intel’s Network and Edge division.
