Why AI Security Starts at the OS Level: SUSE’s Role in Protecting AI Models and Data

Artificial intelligence is reshaping how enterprises deliver value, from accelerating customer service with generative models to optimizing global supply chains. But as organizations race ahead, one fact is often overlooked: AI is only as secure as the foundation it runs on.

While enterprises invest heavily in firewalls, Kubernetes security policies, and model guardrails, attackers often aim lower in the stack—at the operating system, where vulnerabilities are harder to detect but easier to exploit. A compromised OS can give adversaries broad access to sensitive data, model artifacts, and even entire clusters.

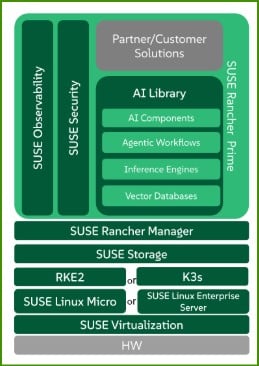

That’s why AI security starts at the OS level. And it’s also why SUSE developed SUSE AI and the SUSE AI Suite—an integrated offering that brings together the trusted Linux foundation of SUSE with Kubernetes orchestration, observability, storage, and runtime security. The suite is designed to protect data pipelines, AI models, and workloads from edge to core to cloud.

1. The Operating System as the First Security Perimeter

Every AI workload touches the OS. From GPU scheduling to memory management, the OS is responsible for the most fundamental processes that power training and inference. If the OS is compromised, attackers gain entry long before application or container-level controls come into play.

The SUSE AI Suite addresses this challenge at the foundation by providing a secure OS:

- SUSE Linux Enterprise Server (SLES) delivers a hardened kernel, secure boot, and FIPS-certified cryptography, meeting compliance needs in highly regulated industries such as finance, healthcare, and government.

- SUSE Linux Micro delivers a lightweight, immutable Linux OS, ideal for edge AI deployments where reliability and zero-trust security are paramount.

With these operating system options, SUSE ensures that whether you’re running large training clusters in the cloud or inference at the edge, your workloads start from a secure, compliant base.

2. Protecting AI Data Pipelines at Rest and in Motion

AI models are only as good as the data that trains them. These datasets—customer transactions, patient records, or intellectual property—move across OS-managed pipelines before they ever reach a model.

The SUSE AI Suite integrates multiple components to safeguard this journey:

- SUSE Storage provides secure, high-performance, and encrypted storage for training and inference workloads.

- Kernel-level access controls in SUSE Linux Enterprise Server and SUSE Linux Micro enforce least-privilege principles for sensitive datasets.

- Secure connectivity across hybrid and multi-cloud environments is orchestrated through RKE/K3s and managed by the Rancher Management Server.

Example: A pharmaceutical company training models on proprietary drug research can encrypt training data with SUSE Storage, enforce data access policies via SUSE Linux Enterprise Server, and maintain data sovereignty across cloud regions with SUSE Rancher.
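What least privilege means for a dataset on disk can be illustrated with a small audit script. This is a minimal sketch, not a SUSE tool: it only inspects classic POSIX permission bits, and the policy it checks (owner read/write, group read, nothing for other users) is an assumption chosen for illustration. The kernel-level access controls in SUSE Linux Enterprise Server and SUSE Linux Micro go well beyond this, but the script shows the kind of over-exposure they are meant to prevent.

```python
import stat
from pathlib import Path

# Illustrative policy (an assumption, not a SUSE default): owner may read/write,
# group may read, "other" users get nothing. Any of these bits violates it.
FORBIDDEN_BITS = stat.S_IRWXO | stat.S_IWGRP | stat.S_IXGRP


def find_overexposed_files(root: str) -> list[str]:
    """Return 'path: mode' strings for regular files under `root` that break the policy."""
    findings = []
    for path in sorted(Path(root).rglob("*")):
        # Skip symlinks (their targets may live outside `root`) and non-files.
        if path.is_symlink() or not path.is_file():
            continue
        mode = path.stat().st_mode
        if mode & FORBIDDEN_BITS:
            findings.append(f"{path}: {stat.filemode(mode)}")
    return findings
```

Calling `find_overexposed_files("/srv/datasets")` (a hypothetical path) would report, for example, a world-readable training file with mode `-rw-r--r--`, while a file set to `-rw-r-----` passes.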

3. Guarding AI Models Against Tampering

The models themselves—trained weights, embeddings, and fine-tuned architectures—are valuable intellectual property. If attackers alter or steal these, the consequences range from financial loss to biased or unsafe outcomes in production.

Within the SUSE AI Suite, multiple layers protect models:

- SUSE Linux Enterprise Server helps ensure that only trusted binaries run and that model artifacts are verified before use, thanks to secure boot and integrity verification.

- Confidential computing support isolates model execution, preventing unauthorized access to in-memory weights.

- RKE/K3s with SUSE Rancher Prime provides controlled deployment, so only authorized workloads run on approved infrastructure.

Consider a retailer deploying recommendation models across hundreds of stores. If model artifacts are tampered with, fraud could be introduced at scale. By verifying binaries and model artifacts and restricting deployment through SUSE Rancher, the SUSE AI Suite ensures that only authentic, unmodified models reach production.
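Artifact verification of this kind usually comes down to comparing cryptographic digests against a trusted record. The sketch below is a generic illustration, not how SUSE Linux Enterprise Server or SUSE Rancher implements it: it assumes a hypothetical JSON manifest, stored outside the model directory, that maps each artifact filename to its SHA-256 digest and was produced at release time. In practice the manifest itself would also be signed. Any artifact that is missing, unexpected, or whose digest differs is reported.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large model weights never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(model_dir: str, manifest_path: str) -> list[str]:
    """Return a list of problems; an empty list means every artifact matched.

    The manifest format {"filename": "hex sha256", ...} is a hypothetical
    convention for this sketch, not a SUSE file format.
    """
    manifest = json.loads(Path(manifest_path).read_text())
    problems = []
    present = {p.name for p in Path(model_dir).iterdir() if p.is_file()}
    # Files nobody signed off on are as suspicious as modified ones.
    for name in sorted(present - set(manifest)):
        problems.append(f"unexpected artifact not in manifest: {name}")
    for name, expected in sorted(manifest.items()):
        path = Path(model_dir) / name
        if not path.is_file():
            problems.append(f"missing artifact: {name}")
        elif sha256_of(path) != expected.lower():
            problems.append(f"digest mismatch: {name}")
    return problems
```

A deployment pipeline could refuse to roll out a model whenever `verify_artifacts` returns a non-empty list, which is the behavior the retailer scenario above depends on.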

4. Observability and Real-Time Threat Detection

AI workloads are dynamic and resource-intensive, which makes observability essential for detecting anomalies. Without visibility at the OS and infrastructure layers, organizations cannot detect attacks until damage is already done.

SUSE AI Suite integrates SUSE Observability and SUSE Observability Platform Optimization to deliver:

- GPU utilization monitoring and tracing to spot unusual consumption patterns.

- Token-level monitoring to identify abnormal inference requests.

- Proactive anomaly dashboards integrated with security operations.

When combined with SUSE Security, enterprises gain runtime defense with deep network inspection, vulnerability scanning, and zero-trust segmentation for AI workloads.

Example: Financial services provider FIS-ASP uses SUSE Observability dashboards to trace GPU workloads supporting SAP environments, ensuring compliance while detecting anomalies in real time.
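The GPU utilization and token-level monitoring described above both rely on spotting values that break from a recent baseline. The following is a minimal sketch of that idea using a rolling z-score; it is not how SUSE Observability works internally. It assumes you already export one numeric sample per interval (a GPU utilization percentage) or per request (a token count), and the window size and threshold are illustrative, untuned values.

```python
from collections import deque
from statistics import mean, pstdev


def flag_anomalies(samples, window=20, threshold=3.0):
    """Yield (index, value, z_score) for samples far outside the recent baseline.

    Each sample is compared with the mean and population standard deviation
    of the previous `window` samples. The first `window` samples only seed
    the baseline and are never flagged. Window and threshold are illustrative.
    """
    history = deque(maxlen=window)
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is treated as anomalous.
                if value != mu:
                    yield i, value, float("inf")
            else:
                z = (value - mu) / sigma
                if abs(z) > threshold:
                    yield i, value, z
        # Every sample, flagged or not, joins the baseline.
        history.append(value)
```

The same function accepts either signal: a series of GPU utilization readings such as `[55, 57, 54, 56] * 5 + [99]` flags only the final spike, and a stream of per-request token counts flags a single unusually long request. Because flagged values still enter the baseline, a sustained shift eventually becomes the new normal; a production detector would need to decide whether that is acceptable.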

5. Securing Hybrid and Edge AI Deployments

AI is no longer confined to centralized data centers. Manufacturers, telcos, and retailers increasingly deploy models at the edge for low-latency inference. But distributed environments bring new security challenges.

SUSE addresses these with:

- SUSE Linux Micro for secure, minimal-footprint edge deployments

- K3s, a lightweight Kubernetes distribution for constrained environments

- SUSE Virtualization (HCI) for flexible, on-premises AI workloads

Policies defined in SUSE Rancher extend seamlessly across cloud, core, and edge, ensuring that security posture remains consistent no matter where models are deployed.

Example: A manufacturer can deploy computer vision models to factory-floor devices running SUSE Linux Micro and manage them centrally through SUSE Rancher, with unified observability and enforcement.
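Keeping posture consistent across cloud, core, and edge amounts to detecting drift between each cluster and a shared baseline. The sketch below is purely illustrative: the cluster names, setting keys, and values are hypothetical, and in a real deployment the policies defined in SUSE Rancher would be the source of truth rather than a Python dictionary.

```python
from typing import Any

# Hypothetical baseline that every cluster is expected to match.
BASELINE: dict[str, Any] = {
    "pod_security": "restricted",
    "network_policy_default": "deny",
    "image_signature_required": True,
}


def find_drift(clusters: dict[str, dict[str, Any]],
               baseline: dict[str, Any] = BASELINE) -> dict[str, dict[str, tuple]]:
    """Map each drifting cluster to {setting: (expected, actual)}.

    A setting missing from a cluster's reported config counts as drift,
    with the actual value reported as None.
    """
    drift: dict[str, dict[str, tuple]] = {}
    for name, settings in sorted(clusters.items()):
        diffs = {
            key: (expected, settings.get(key))
            for key, expected in baseline.items()
            if settings.get(key) != expected
        }
        if diffs:
            drift[name] = diffs
    return drift


if __name__ == "__main__":
    # Hypothetical reported configurations from one cloud and one edge cluster.
    reported = {
        "cloud-eu-1": dict(BASELINE),
        "factory-edge-07": {**BASELINE, "network_policy_default": "allow"},
    }
    for cluster, diffs in find_drift(reported).items():
        for key, (expected, actual) in diffs.items():
            print(f"{cluster}: {key} expected {expected!r}, found {actual!r}")
```

Treating a missing setting as drift is a deliberate choice here: an edge device that never received a policy should be surfaced, not silently assumed compliant.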

6. Laying the Groundwork for Ethical, Trusted AI

Discussions around AI ethics—bias, explainability, and governance—often start at the application layer. But if the foundation is weak, even the best guardrail technologies cannot be trusted. OS-level assurance ensures that governance frameworks remain intact and tamper-proof.

The SUSE AI Suite strengthens ethical AI initiatives by:

- Ensuring data lineage is preserved through secure pipelines.

- Guaranteeing that only verified models are deployed, supporting transparency.

- Providing runtime observability to audit and validate outputs.

By combining infrastructure security with ethical AI guardrails, SUSE helps organizations deliver responsible, trustworthy AI that regulators, customers, and partners can rely on.
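Preserved data lineage is easier to audit when the record of it is tamper-evident. One common technique, shown here as a generic sketch rather than a SUSE feature, is a hash-chained log: each entry stores the SHA-256 digest of the previous entry, so editing or reordering an earlier record breaks every link after it. The event fields used in this example are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list[dict], event: dict) -> None:
    """Append a lineage event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "event": event,
                "hash": _entry_hash(prev_hash, event)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every link; an edited, reordered, or removed interior entry fails.

    Note: dropping entries from the end is not detectable from the log alone;
    that requires storing the latest hash somewhere independent.
    """
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

For example, a pipeline might append a hypothetical `{"step": "ingest", "dataset": "trials-v1"}` event followed by a `{"step": "train", "model": "rec-v2"}` event; if anyone later rewrites the dataset name in the first record, `verify_chain` returns `False`.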

SUSE AI Suite: A Unified Approach to Securing AI

SUSE AI Suite brings all the essential components together in one integrated offering:

| Layer | Capability |
|---|---|
| OS Foundation | SUSE Linux Enterprise + SUSE Linux Micro |
| Kubernetes Orchestration | RKE / K3s with Rancher |
| Observability | SUSE Observability + Platform Optimization |
| Storage | Encrypted SUSE Storage |
| Security | SUSE Security-powered runtime defense |
| Virtualization | SUSE Virtualization (HCI) for hybrid/edge AI |

Simple, Flexible Licensing Options

SUSE AI Suite simplifies adoption with two enterprise-friendly models:

- vCPU Metric for Cloud: Based on cores or vCPUs assigned to worker nodes

- Socket Metric for Bare Metal: Supports up to 64 cores per socket

This flexibility removes complexity and aligns with your infrastructure footprint—letting you focus on securing your AI, not decoding licenses.

Final Thoughts

AI security does not begin at the application layer or the Kubernetes cluster. It begins at the operating system—the bedrock that governs how data is stored, processed, and protected. Without a trusted OS, higher-level controls cannot deliver on their promises.

But in modern enterprises, the OS is only the starting point. Protecting AI models and data requires an integrated approach that spans observability, Kubernetes management, storage, and runtime security.

That is exactly what the SUSE AI Suite delivers. Combining SUSE Linux Enterprise and SUSE Linux Micro, SUSE Rancher Prime (RKE/K3s), SUSE Observability, SUSE Storage, SUSE Security, and SUSE Virtualization (HCI), the suite provides a complete foundation for trusted AI adoption. Available with simple licensing metrics for cloud and bare-metal deployments, it removes complexity while strengthening compliance and resilience.

With SUSE AI and the SUSE AI Suite, enterprises can:

- Secure data pipelines, protecting data at rest and in motion, all the way to the edge.

- Ensure models remain tamper-proof and auditable.

- Detect and respond to threats in real time.

- Deploy AI with confidence across multi-cloud, on-prem or air-gapped environments.

👉 Ready to secure your AI infrastructure from the ground up?

Explore how SUSE AI and the SUSE AI Suite provide the hardened foundation, observability, and control you need to build and scale AI securely—anywhere. Visit https://www.suse.com/products/ai/

Nov 18th, 2025