Why AI Still Cannot Run Your Linux Infrastructure (And What Must Change)

AI assistants are rapidly becoming part of how teams write code, analyze data and automate workflows. Yet when it comes to operating a real enterprise Linux environment, AI still falls short.

Today, Linux is the foundation that runs AI workloads across data centers and clouds. We trust Linux to execute the most advanced AI systems in the world. Yet we do not trust AI to operate the very environments that make those systems possible.

This contrast highlights the real challenge. The limitation is not AI itself but the lack of a safe way to connect it to mission-critical infrastructure: one that preserves business continuity and keeps operations secure and under governance control. Ultimately, the “AI-ready” concept must evolve from Linux running AI to AI-assisted Linux operations.

The cause is not a lack of intelligence in Large Language Models (LLMs). It is the environment they are expected to operate in, and the need to control how they interact with it.

Real data centers are not greenfield labs. They are heterogeneous estates made of different Linux distributions, versions, legacy systems, compliance constraints and strict security requirements. This complexity is exactly what makes enterprise Linux reliable, but it also makes it nearly impossible for AI assistants to interact with infrastructure in a safe and meaningful way.

If AI is to become a true operations tool, something fundamental must change.

The missing layer between LLMs and infrastructure

Today, most AI demonstrations assume a simplified environment: a single platform, modern tooling and limited security boundaries. Enterprise reality looks very different.

For an AI assistant to safely operate Linux systems, four conditions must be met:

- It must understand heterogeneous environments, not a single distribution

- It must operate through a trusted and auditable management layer

- It must respect enterprise security, identity and software supply chain requirements

- It must keep humans in the loop to ensure business continuity

This is where the Model Context Protocol (MCP) becomes important.

MCP provides a standard way for AI agents to interact with real systems through defined tools, rather than through brittle scripts or unsafe direct access. It is an emerging foundation for what many call agentic AI, where AI can take controlled and meaningful actions.
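To make the idea of "defined tools" concrete, here is a minimal sketch in plain Python. It is not the official MCP SDK and it omits the JSON-RPC envelope and transport entirely. The method names `tools/list` and `tools/call` follow MCP's naming, while the `Tool` class, the `list_pending_patches` tool and its `system_id` argument are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Tool:
    """A narrowly scoped operation the AI agent is allowed to request."""
    name: str
    description: str
    required_args: Tuple[str, ...]
    handler: Callable[[Dict[str, Any]], str]


def list_pending_patches(args: Dict[str, Any]) -> str:
    # Hypothetical stand-in: a real tool would query the management platform.
    return f"patch status requested for system {args['system_id']}"


# Only tools registered here are reachable; there is no generic shell access.
TOOLS = {
    "list_pending_patches": Tool(
        name="list_pending_patches",
        description="Report pending patches for one managed system.",
        required_args=("system_id",),
        handler=list_pending_patches,
    ),
}


def text_result(text: str, is_error: bool = False) -> Dict[str, Any]:
    """Wrap a message in a simplified MCP-style tool result."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch a simplified MCP-style request to a registered tool."""
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        return {"tools": [{"name": t.name, "description": t.description}
                          for t in TOOLS.values()]}
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return text_result("unknown tool", is_error=True)
        arguments = params.get("arguments", {})
        missing = [a for a in tool.required_args if a not in arguments]
        if missing:
            return text_result(f"missing arguments: {missing}", is_error=True)
        return text_result(tool.handler(arguments))
    return text_result(f"unsupported method: {method}", is_error=True)
```

In this sketch, a request for an unregistered tool such as `run_shell` is answered with an error result instead of being executed. That is the practical difference between defined tools and direct access.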

But standards alone are not enough. They must be implemented where real infrastructure is managed.

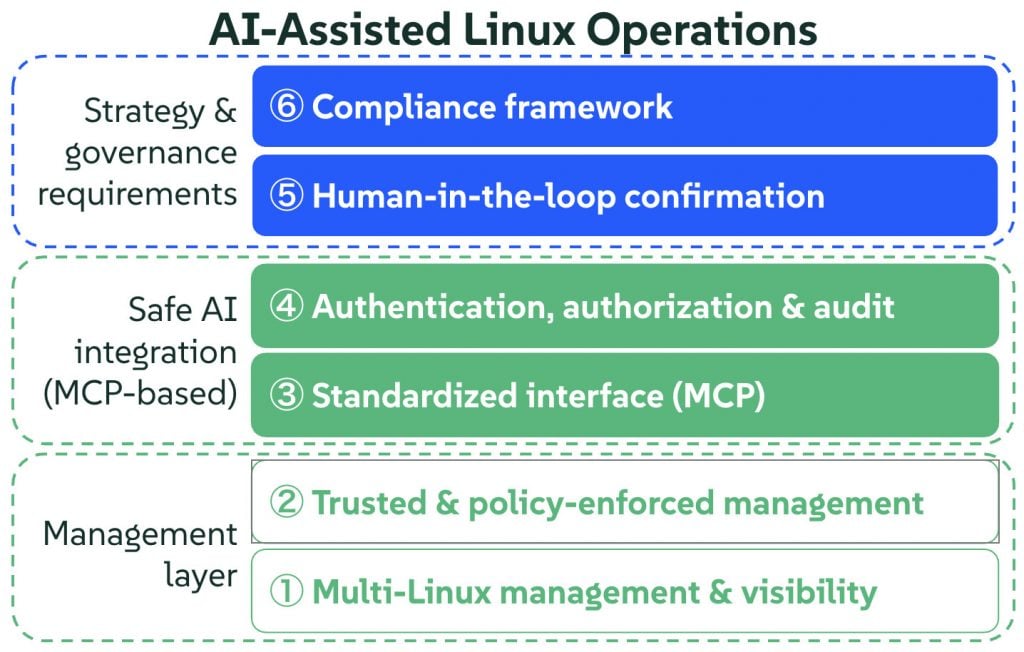

Layered architecture for AI-assisted operations in enterprise Linux, combining multi-Linux management, MCP-based safe AI integration, authorization and audit controls, human-in-the-loop confirmation, and compliance alignment.

From the chatbot to AI-assisted operations

Most current AI use in IT resembles an advanced chatbot. It provides answers, suggestions and summaries based on user input. While useful, this approach remains limited for real infrastructure operations.

- It provides blind answers instead of context-aware analysis

- It offers reactive visibility instead of real-time visibility at scale

- It relies on limited human input instead of direct interaction with IT systems

- It assumes the state of systems instead of correlating actual events

For AI to become a true operations tool, it must move beyond the chatbot model and integrate directly with the platforms that manage infrastructure.

This is where AI-assisted operations become fundamentally different.

By interacting through enterprise Linux management layers, AI can access live system context, perform audits, suggest actions and operate within existing security and policy boundaries.

Why Linux operations is the real frontier for AI

Linux is the backbone of enterprise workloads. It runs SAP systems, databases, cloud platforms, retail infrastructure and industrial control systems. Yet Linux operations today remain heavily manual, policy-driven and fragmented across distributions.

This is where AI can provide enormous value, if it can safely integrate into existing operational models.

The challenge is not teaching AI about Linux. The challenge is enabling AI to work through the tools that already manage Linux at scale.

The role of multi-Linux management

In most organizations, Linux management is already centralized through enterprise tools that handle lifecycle management, patching, compliance, onboarding and auditing across different Linux distributions and versions.

This management layer becomes the natural bridge between LLMs and real infrastructure.

Modern Linux platforms such as SUSE Linux leverage AI-assisted operations within the OS itself to accelerate troubleshooting and system-level analysis. The true value, however, appears when AI-assisted operations extend across heterogeneous fleets, from data center to edge, through centralized multi-Linux management across the entire landscape. Both approaches are complementary and will coexist, interacting to optimize operations at different levels.

By exposing these capabilities through MCP-compatible interfaces, AI assistants can:

- Onboard systems

- Audit patch status

- Identify vulnerabilities

- Enforce policies

- Operate within existing identity and security models

All without replacing existing systems or disrupting established environments.

This is modernization without compromise.
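One way to picture "operating within existing identity and security models" is a deny-by-default mapping from roles to permitted tools. The sketch below assumes a simple role-based model. The role names and tool names are hypothetical, and in a real deployment the mapping would come from the management platform's own identity system rather than a hard-coded table.

```python
from typing import Dict, FrozenSet

# Hypothetical roles and tool names, for illustration only.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "auditor": frozenset({"audit_patch_status", "identify_vulnerabilities"}),
    "operator": frozenset({
        "audit_patch_status",
        "identify_vulnerabilities",
        "onboard_system",
        "enforce_policy",
    }),
}


def is_authorized(role: str, tool_name: str) -> bool:
    """Deny by default: unknown roles and unlisted tools are rejected."""
    return tool_name in ROLE_PERMISSIONS.get(role, frozenset())


def call_tool_as(role: str, tool_name: str) -> str:
    """Dispatch a tool call only if the caller's role permits it."""
    if not is_authorized(role, tool_name):
        raise PermissionError(f"role {role!r} may not call {tool_name!r}")
    # A real implementation would forward the call to the management layer.
    return f"{tool_name} dispatched on behalf of {role}"
```

Because the check sits in front of every tool call, an AI assistant acting for an auditor can read patch status but cannot onboard systems or enforce policies.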

Trust is not optional for AI-assisted operations

For AI to operate infrastructure, trust in the software supply chain becomes critical.

Container provenance, image signing, vulnerability scanning, identity federation and auditable actions are no longer optional best practices. They are prerequisites for allowing AI agents to interact with production systems.

This is why placing MCP implementations inside trusted registries and enterprise-grade management platforms is so important. AI-assisted operations must inherit the same trust model as the infrastructure they manage.

Humans must remain in the loop

AI operations can accelerate management, improve visibility and deliver context-aware insights at scale, making IT environments easier to maintain and more trustworthy. This, however, does not mean bypassing human oversight.

A human-in-the-loop AI strategy is essential when running mission-critical systems. AI-driven operations must be supervised and should never modify systems without explicit confirmation.

When a wrong decision can lead to downtime, human validation becomes critical for business resiliency.
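The confirmation requirement can be sketched as a small approval gate. This is an illustrative pattern, not a description of any specific product's implementation. `ProposedAction`, `ApprovalGate` and `ask_on_console` are hypothetical names, and the audit log here is an in-memory list standing in for a real audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class ProposedAction:
    description: str
    target: str
    mutating: bool  # True if the action changes system state


@dataclass
class ApprovalGate:
    confirm: Callable[[ProposedAction], bool]
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def run(self, action: ProposedAction, execute: Callable[[], str]) -> str:
        # Read-only actions pass through; mutating ones need explicit approval.
        approved = (not action.mutating) or self.confirm(action)
        # Every decision is recorded, whether approved or rejected.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action.description,
            "target": action.target,
            "approved": approved,
        })
        if not approved:
            return "rejected by operator"
        return execute()


def ask_on_console(action: ProposedAction) -> bool:
    """Ask a human operator; anything other than 'y' counts as a refusal."""
    answer = input(f"Apply '{action.description}' to {action.target}? [y/N] ")
    return answer.strip().lower() == "y"
```

With `ApprovalGate(confirm=ask_on_console)`, a proposed reboot or patch runs only after an operator types `y`, and both approvals and rejections land in the audit log.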

The key idea behind AI-assisted operations is that AI does not replace humans; it empowers them.

A broader ecosystem is forming

The emergence of the Model Context Protocol and the work of the Agentic AI Foundation (AAIF), part of the Linux Foundation, signal that the industry recognizes this challenge. A common standard is forming around how AI agents should interact with real systems.

Vendors, open source projects and enterprise platforms are beginning to align around this idea: AI must not bypass operations. It must integrate into them.

Where SUSE fits in this evolution

SUSE’s focus on multi-Linux management, software supply chain trust and secure lifecycle operations places it naturally at the center of this evolution. As an active participant in the ecosystems forming around the Model Context Protocol and agentic AI standards, SUSE is contributing to how these concepts become practical for real infrastructure. By distributing MCP capabilities through the SUSE Registry, we are ensuring that AI agents inherit a trusted provenance.

Our approach provides the governance hooks needed to support human oversight of AI-assisted actions, whether a system reboot or a security patch, before they touch a production environment.

By enabling MCP-based interaction through SUSE Linux at the OS level for deeper integration, through SUSE Multi-Linux Manager for heterogeneous management at scale, and by distributing these capabilities through the SUSE Registry, SUSE is helping turn MCP from a concept into something that can operate in real environments today.

This is not about future AI visions. It is about making AI useful for the Linux environments organizations already run.

You can see a concrete example of this approach in our recent release of the SUSE Multi-Linux Manager MCP server, now available in the SUSE Registry.

The future of Linux operations is conversational, automated and secure

We are approaching a point where Linux operations will no longer be driven only by dashboards and scripts, but also by conversational interactions with AI agents operating through trusted management platforms.

When that happens, the role of standards like MCP, ecosystems like AAIF and platforms capable of managing heterogeneous Linux environments will become critical.

AI will not replace operations teams. It will augment them, provided it can operate safely within the complexity of real enterprise Linux.

That is the change now underway.
