SIGKILL vs SIGTERM: A Developer’s Guide to Process Termination

As developers, managing how your applications shut down or handle interruptions is crucial, especially when dealing with Linux systems, containers or Kubernetes. Understanding the different signals that can terminate a process is essential for maintaining smooth and predictable application behavior.

In this blog, we’ll explore two of the most important signals—SIGKILL and SIGTERM. We’ll break down what each signal does, how they differ and why it matters in real-world scenarios. Whether you’re debugging an unresponsive application or managing containers in a complex Kubernetes environment, knowing when and how to use these signals will help you keep your systems running efficiently and gracefully.

Let’s dive into the details and see how mastering these signals can improve your process management and overall application stability.

The basics of Unix Signals

Before we dive into the specifics of SIGKILL and SIGTERM, let’s briefly review the concept of signals in Unix-like operating systems.

Signals are like messages for programs. They are software interrupts sent to a program to indicate that an important event has occurred. The events can range from user requests to exceptional runtime occurrences. Each signal has a name and a number, and there are different ways to send them to a program.

Here’s a quick rundown of some key signals you might encounter:

- SIGHUP (signal 1): Hangup

- SIGINT (signal 2): Interrupt (usually sent by Ctrl+C)

- SIGQUIT (signal 3): Quit

- SIGKILL (signal 9): Kill (cannot be caught or ignored)

- SIGTERM (signal 15): Termination signal

- SIGSTOP (signal 19): Stop the process (cannot be caught or ignored)

SIGTERM vs SIGKILL: An In-depth Comparison

Now, let’s focus on the two signals that are most commonly used for process termination: SIGKILL and SIGTERM.

SIGTERM: The Polite Request

SIGTERM (signal 15) is the default signal sent by the kill command. It’s designed to be a gentle request asking the process to terminate gracefully. SIGTERM is the preferred way to end a process because it allows the program to shut down gracefully, saving data and releasing resources properly.

When a process receives SIGTERM:

- It can perform cleanup operations

- It has the opportunity to save its state

- It can close open files and network connections

- Child processes are not automatically terminated

Example of sending SIGTERM:

kill <PID>

# or explicitly

kill -15 <PID>

SIGKILL: The Forceful Termination

SIGKILL (signal 9) is the nuclear option for terminating a process. SIGKILL is used as a last resort when a process is unresponsive to SIGTERM or when you need to stop a process immediately without any delay.

When you use kill -9 or send SIGKILL:

- The process is immediately terminated

- No cleanup operations are performed

- The process has no chance to save its state

- Child processes become orphans and was adopted by the init process

Example of sending SIGKILL:

kill -9 <PID>

Linux SIGKILL and Signal 9

In Linux, SIGKILL is represented by the number 9. When you see references to “signal 9” or “interrupted by signal 9 SIGKILL,” it’s referring to this forceful termination signal. It’s important to note that while SIGKILL is guaranteed to stop the process, it may leave the system in an inconsistent state due to the lack of cleanup.

This can lead to:

- Corrupted files

- Leaked resources

- Orphaned child processes

- Incomplete transactions

Can you catch SIGKILL?

One crucial difference between SIGTERM and SIGKILL is that SIGKILL cannot be caught, blocked or ignored by the process. This makes it a reliable way to terminate stubborn processes, but it also means that the process cannot perform any cleanup operations.

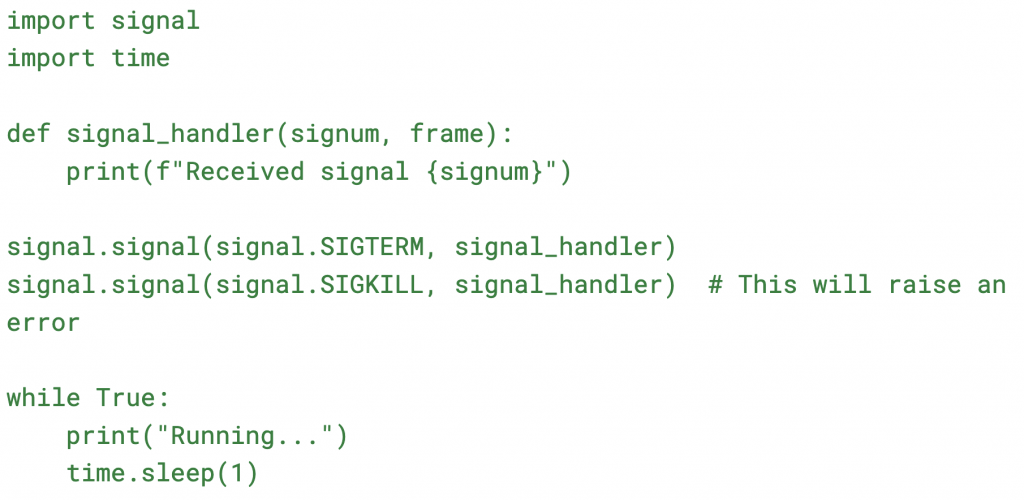

Here’s a simple Python example demonstrating that SIGKILL cannot be caught:

If you run this script and try to send SIGTERM and SIGKILL, you’ll see that SIGTERM can be caught and handled, while SIGKILL cannot.

Docker and Kubernetes: SIGTERM and SIGKILL in containerized environments

Understanding how SIGTERM and SIGKILL work becomes even more critical in containerized environments like Docker and Kubernetes.

In Docker

Docker uses a combination of SIGTERM and SIGKILL for graceful container shutdown:

- When you run docker stop, Docker sends an SIGTERM to the main process in the container.

- Docker then waits for a grace period (default 10 seconds) for the process to exit.

- If the process doesn’t exit within the grace period, Docker sends a SIGKILL to forcefully terminate it.

You can adjust the grace period when stopping a container:

docker stop –time 20 my_container # Wait for 20 seconds before sending SIGKILL

It’s crucial to design your containerized applications to handle SIGTERM properly to ensure graceful shutdowns.

In Kubernetes

Kubernetes follows a similar but more complex pattern when terminating pods:

- The Pod’s status is updated to “Terminating”.

- If the Pod has a preStop hook defined, it is executed.

- Kubernetes sends SIGTERM to the main process in each container.

- Kubernetes waits for a grace period (30 seconds by default, but configurable).

- If containers haven’t terminated after the grace period, Kubernetes sends SIGKILL.

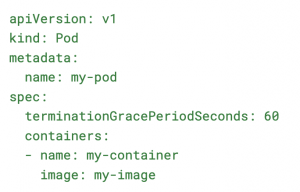

Here’s an example of how to set a custom termination grace period in a Kubernetes Pod specification:

This configuration gives the Pod 60 seconds to shut down gracefully before being forcefully terminated.

Task exited with return code NEGSIGNAL.SIGKILL

If you encounter an error message like “task exited with return code NEGSIGNAL.SIGKILL” or simply “NEGSIGNAL.SIGKILL,” it typically means that the process was terminated by an SIGKILL signal.

This could be due to:

- The process being forcefully terminated by an administrator or the system

- The process exceeding resource limits (e.g., memory limits in a containerized environment)

- A timeout being reached in a containerized environment (e.g., Docker’s stop timeout or Kubernetes’ termination grace period)

When debugging such issues, consider the following:

- Check system logs for any out-of-memory errors

- Review your application’s resource usage

- Ensure your application handles SIGTERM properly to avoid SIGKILL

- In containerized environments, check if the allocated resources and grace periods are sufficient

Best practices for developers

To ensure your applications behave well in various environments and can be managed effectively, follow these best practices:

- Handle SIGTERM gracefully:

- Implement signal handlers to catch SIGTERM

- Perform necessary cleanup operations

- Save important state information

- Close open files and network connections

- Design for quick shutdowns:

- Aim to complete shutdown procedures within a reasonable timeframe (e.g., less than 30 seconds for Kubernetes environments)

- Use timeouts for long-running operations during shutdown

- Use SIGKILL sparingly:

- Only resort to SIGKILL when absolutely necessary

- Be aware of the potential consequences of forceful termination

- Implement proper logging:

- Log the receipt of termination signals

- Log the steps of your shutdown process

- This helps in debugging and understanding the application’s behavior during termination

- Test termination scenarios:

- Simulate SIGTERM in your testing environments

- Verify that your application shuts down gracefully

- Test with different timing scenarios (e.g., during database transactions)

- Monitor for unexpected terminations:

- Set up alerts for SIGKILL terminations

- Investigate the root cause of any unexpected, forceful terminations

- In containerized environments:

- Ensure your application can be shut down within the allocated grace period

- Consider implementing liveness and readiness probes in Kubernetes to help manage the application lifecycle

- Handle child processes:

- Ensure parent processes properly manage the termination of child processes

- Consider using process groups for easier management of related processes

Advanced Considerations

Signal Propagation in Process Groups

In Unix-like systems, signals are typically sent to individual processes. However, you can also send signals to process groups. This is particularly useful when dealing with parent-child process relationships.

The kill command can target process groups by prefixing the process ID with a minus sign:

kill -TERM -<PGID> # Sends SIGTERM to all processes in the process group

This can be useful in scripts or applications that need to manage multiple related processes.

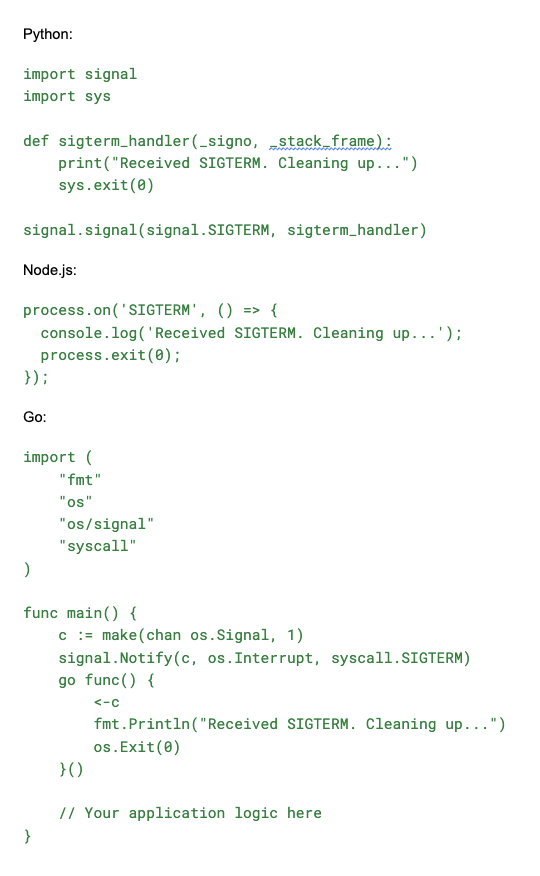

Handling SIGTERM in Different Programming Languages

Different programming languages have various ways of handling signals.

Here are a few examples:

SIGTERM vs SIGINT

While this article focuses on SIGTERM and SIGKILL, it’s worth mentioning SIGINT (signal 2), which is typically sent by pressing Ctrl+C in a terminal.

SIGINT is similar to SIGTERM in that it can be caught and handled, but it’s generally used for user-initiated interrupts rather than system-managed terminations.

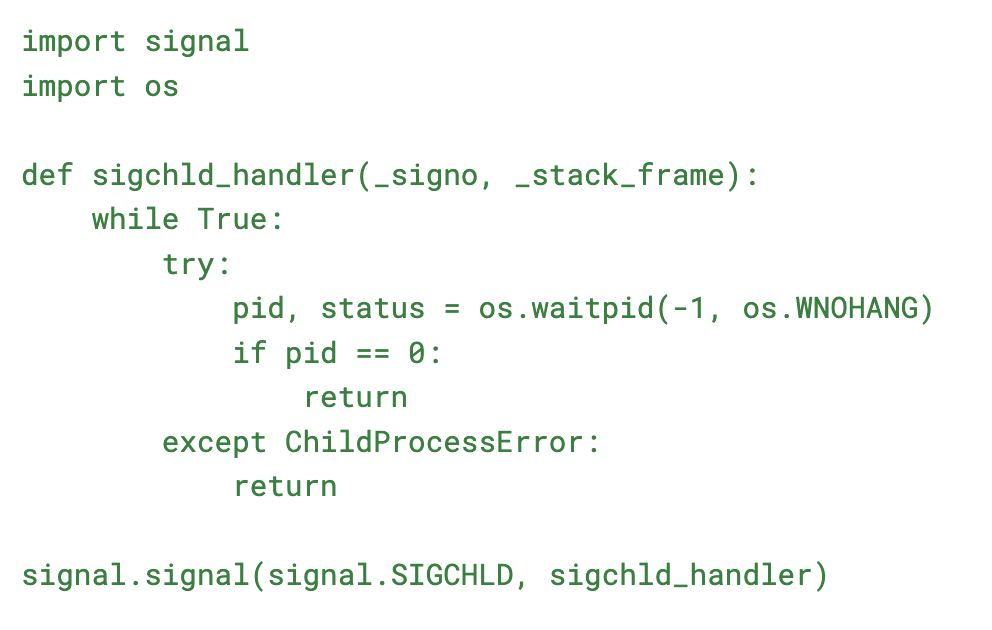

Zombie Processes and SIGCHLD

When discussing process termination, it’s important to understand zombie processes.

A zombie process is a process that has completed execution but still has an entry in the process table. This happens when a child process terminates, but the parent process hasn’t yet called wait() to read its exit status.

Proper handling of SIGCHLD (signal sent to a parent process when a child process dies) can help prevent zombie processes:

Conclusion

Understanding the differences between SIGKILL and SIGTERM, as well as how they’re used in various environments like Linux, Docker and Kubernetes, is crucial for developing robust and well-behaved applications.

By implementing proper signal handling, designing for graceful shutdowns and following best practices, you can ensure that your applications can be managed effectively and respond appropriately to termination requests.

Remember that while SIGKILL is a powerful tool, it should be used judiciously. Proper handling of SIGTERM in your applications will lead to more predictable behavior, easier debugging and better resource management in both traditional and containerized environments.

As you develop and deploy applications, remember these concepts and regularly review your signal handling code to ensure it meets the needs of your specific use cases and deployment environments.

See signal handling in action

Would you like to monitor how your apps respond to termination signals in real time? Try SUSE Cloud Observability free for 30 days on the AWS Marketplace and gain:

- Full-stack observability across your Kubernetes workloads

- Real-time insights into signal-driven shutdowns and anomalies

- A faster path to root cause when things go sideways

Related Articles

Jan 21st, 2026

Limit Volume Replica Actual Space Usage

Apr 24th, 2025

What Is Telco Cloud?

Nov 21st, 2024