Live Patching Meltdown – A Research Project by SUSE Engineers (Part 2)

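Following Part 1: Major Technical Obstacles to Live Patching Meltdown, this post gives some background on virtual address mappings in the context of the Meltdown vulnerability and then describes how we patched kGraft itself.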

Virtual memory mapping is one of the protection features provided by the CPU, and it is essential for separating privileges between user mode and kernel mode.

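Whenever memory is accessed, whether from user space or from the kernel, the CPU interprets the target address as a so-called "virtual" address. It translates virtual addresses into physical ones by means of special mapping structures called page tables. Each process has its own map describing how it uses memory. In particular, when two different processes run, the same virtual address can be (and usually is) mapped to different regions of physical memory, which keeps the two processes from interfering with each other.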
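To make the per-process mapping concrete, here is a deliberately simplified C model. The flat single-level table, `struct toy_process`, `toy_translate()` and the constants are all hypothetical; real x86_64 page tables are multi-level.

```c
#include <assert.h>
#include <stdint.h>

/* Toy model, NOT the real x86_64 format: each process owns a flat table
 * that maps virtual page numbers to physical frame numbers. */
#define PAGE_SHIFT 12
#define TOY_PAGES  16

struct toy_process {
    uint64_t frame_of[TOY_PAGES]; /* virtual page number -> physical frame */
};

/* Translate a virtual address through the given process's own table. */
static uint64_t toy_translate(const struct toy_process *p, uint64_t vaddr)
{
    uint64_t vpn = vaddr >> PAGE_SHIFT;
    uint64_t offset = vaddr & ((1ULL << PAGE_SHIFT) - 1);

    assert(vpn < TOY_PAGES); /* the toy table only covers TOY_PAGES pages */
    return (p->frame_of[vpn] << PAGE_SHIFT) | offset;
}
```

With one table mapping virtual page 1 to frame 5 and another mapping it to frame 9, the same address `0x1234` translates to `0x5234` in the first process and `0x9234` in the second, which is the isolation described above.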

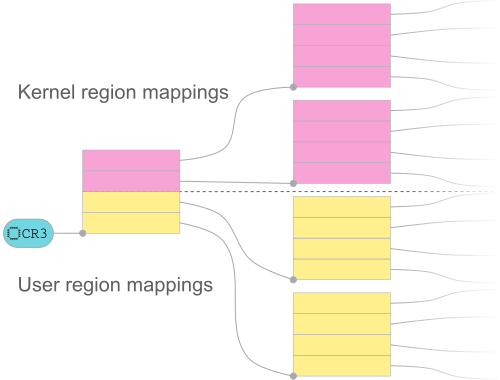

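Page tables are organized as a tree. The kernel tells the CPU which address mapping is current by writing a pointer to the root of that tree into a special register, CR3. This usually happens when switching between processes.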
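The tree walk can be sketched as follows, assuming x86_64 4-level paging: a 48-bit virtual address is split into four 9-bit indices, one per table level, plus a 12-bit page offset, and the walk starts at the top-level table that CR3 points to. The `struct table` nodes holding C pointers are a simplification (real entries hold physical addresses plus flag bits, and large pages are ignored here); all names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Indices extracted from a 48-bit virtual address (4-level paging). */
struct va_indices {
    unsigned pml4, pdpt, pd, pt; /* one 9-bit index per level */
    unsigned offset;             /* 12-bit offset inside the 4 KiB page */
};

static struct va_indices split_vaddr(uint64_t va)
{
    struct va_indices ix = {
        .pml4   = (unsigned)((va >> 39) & 0x1ff),
        .pdpt   = (unsigned)((va >> 30) & 0x1ff),
        .pd     = (unsigned)((va >> 21) & 0x1ff),
        .pt     = (unsigned)((va >> 12) & 0x1ff),
        .offset = (unsigned)(va & 0xfff),
    };
    return ix;
}

/* Simulated table node: upper levels use child[], the last level uses
 * frame[] (physical frame number, 0 meaning "not mapped"). */
struct table {
    struct table *child[512];
    uint64_t frame[512];
};

/* Walk from the root (the table CR3 would point to); 0 on success. */
static int walk(const struct table *cr3_root, uint64_t va, uint64_t *pa)
{
    struct va_indices ix = split_vaddr(va);
    const struct table *pdpt = cr3_root->child[ix.pml4];
    if (!pdpt)
        return -1;
    const struct table *pd = pdpt->child[ix.pdpt];
    if (!pd)
        return -1;
    const struct table *pt = pd->child[ix.pd];
    if (!pt || pt->frame[ix.pt] == 0)
        return -1;
    *pa = (pt->frame[ix.pt] << 12) | ix.offset;
    return 0;
}
```

Switching processes then amounts to handing `walk()` a different root, just as the kernel loads a different value into CR3.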


Besides the translation from virtual to physical addresses, page tables also store some access rights: a mapped virtual address is either accessible from user mode or it is not.
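A minimal sketch of this user/kernel distinction, assuming the x86_64 entry layout in which bit 0 is the Present flag and bit 2 is the User/Supervisor (U/S) flag. The helper `access_allowed()` and `enum cpu_mode` are illustrative, and the check is simplified to a single leaf entry.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Flag bits in the low part of an x86_64 page-table entry. */
#define PTE_PRESENT (1ULL << 0)
#define PTE_RW      (1ULL << 1)
#define PTE_USER    (1ULL << 2) /* U/S: set means accessible from user mode */

enum cpu_mode { MODE_KERNEL, MODE_USER };

/* Simplified check for one leaf entry. On real hardware the U/S bits of
 * every level of the walk must allow the access, and features such as
 * SMAP/SMEP additionally restrict kernel access to user pages. */
static bool access_allowed(uint64_t pte, enum cpu_mode mode)
{
    if (!(pte & PTE_PRESENT))
        return false; /* not mapped: page fault */
    if (mode == MODE_USER && !(pte & PTE_USER))
        return false; /* supervisor-only page: page fault */
    return true;
}
```

An entry without `PTE_USER` is rejected for `MODE_USER` but accepted for `MODE_KERNEL`: the same page table, different privilege level.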

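On x86_64, the Linux kernel splits the entire usable virtual address space into two regions: the lower range is assigned to user space and the upper range to the kernel. Mappings in the lower, user-space region usually differ from process to process, whereas the kernel-space map is always the same and is kept in sync across all page tables.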
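The split can be illustrated with the canonical 4-level layout, in which user addresses lie in `[0, 0x00007fffffffffff]` and kernel addresses start at `0xffff800000000000`, so top-level (PML4) slots 0 to 255 cover user space and slots 256 to 511 cover the kernel. The sketch below, with hypothetical helper names, models how a new process's top-level table could share the kernel half by copying it from a reference table, similar in spirit to what Linux does when allocating a new top-level table.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define PTRS_PER_PML4     512
#define KERNEL_PML4_START 256 /* first slot of the upper (kernel) half */

/* Canonical kernel addresses (4-level paging) start at 0xffff800000000000. */
static bool is_kernel_address(uint64_t va)
{
    return va >= 0xffff800000000000ULL;
}

static unsigned pml4_index(uint64_t va)
{
    return (unsigned)((va >> 39) & 0x1ff);
}

/* Model of setting up a new process's top-level table: the user half
 * starts empty, the kernel half is copied from a reference table, so all
 * processes end up with identical kernel mappings. */
static void new_process_pml4(uint64_t *pml4, const uint64_t *kernel_ref)
{
    memset(pml4, 0, KERNEL_PML4_START * sizeof(uint64_t));
    memcpy(pml4 + KERNEL_PML4_START, kernel_ref + KERNEL_PML4_START,
           (PTRS_PER_PML4 - KERNEL_PML4_START) * sizeof(uint64_t));
}
```

Because the top-level kernel entries point to shared lower-level tables, copying them is enough for every process to see the same kernel mappings.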

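When execution moves from user space into the kernel, the current page table stays in place and only the privilege level is raised. The kernel can therefore access any mapping in that table, including the kernel-space mappings mirrored into every page table.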

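Conversely, when code running in user mode accesses the kernel-space region, the CPU detects the violation by consulting the access rights stored in the page table and raises a fault. The problem with Meltdown is that, although such an access is ultimately denied, the CPU's speculative execution leaves side effects that can be observed indirectly, such as data being loaded into the cache. An attacker can exploit this to infer the actual data stored in memory.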

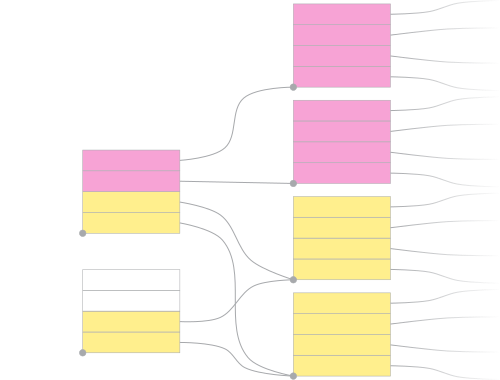

Shadowed page tables: the user copy lacks the kernel region mappings

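The KPTI patch set mitigates this problem by providing, for each "full" page table, a stripped-down variant that is used while running in user mode. In the kernel, these stripped-down variants (also known as "shadow page tables") mirror the user-space mappings of the original page table but contain none of the kernel region mappings. The only exception is the minimal set of mappings needed to enter the entry code and switch from there to the original, complete page table.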
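Deriving a shadow table from a full one might look like the following sketch, which works only at the top level. `ENTRY_PML4_INDEX` and `build_shadow_pml4()` are hypothetical: real KPTI decides differently which kernel pieces stay mapped, and this single slot merely stands in for "the minimal mappings needed by the entry code".

```c
#include <assert.h>
#include <stdint.h>

#define PTRS_PER_PML4     512
#define KERNEL_PML4_START 256
/* Hypothetical slot standing in for the minimal entry-code mappings. */
#define ENTRY_PML4_INDEX  510

/* Derive the user-mode shadow copy from a full top-level table: the user
 * half is mirrored, the kernel half is dropped except for the slot that
 * lets the entry code run and switch to the full table. */
static void build_shadow_pml4(uint64_t *shadow, const uint64_t *full,
                              uint64_t entry_mapping)
{
    for (unsigned i = 0; i < PTRS_PER_PML4; i++) {
        if (i < KERNEL_PML4_START)
            shadow[i] = full[i];       /* user half: mirrored */
        else if (i == ENTRY_PML4_INDEX)
            shadow[i] = entry_mapping; /* minimal entry mapping */
        else
            shadow[i] = 0;             /* kernel half: not mapped */
    }
}
```

Once built, every later change to the user half of `full` has to be repeated in `shadow`, which is the maintenance problem discussed next.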

Achieving a Global State

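Once such user-space shadow page tables have been created, they must be maintained so that they always stay consistent with the full page tables they were derived from. In principle, this can be done by applying ordinary kGraft patches to every relevant place in the kernel where page tables are modified.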

Shadow copy out of sync: the page tables were modified by a task that had not yet transitioned

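However, lifting kGraft's per-task consistency model to a global one requires a few additional steps. For example, suppose that during the transition period two threads, A and B, share a common virtual memory space, and that A has already been switched to the new implementation while B has not. If A now installs shadow page tables prematurely, they will soon become stale, because B's changes to the memory map are not reflected in them.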
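The race can be reproduced in a small, single-threaded user-space model. `struct mm`, `struct task`, the `patched` flag and the old/new setter functions are hypothetical stand-ins for kGraft's per-task redirection and for the kernel's page table update sites.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define USER_SLOTS 256

/* One address space shared by several threads (hypothetical model). */
struct mm {
    uint64_t full[USER_SLOTS];
    uint64_t shadow[USER_SLOTS];
    bool shadow_installed;
};

struct task {
    struct mm *mm;
    bool patched; /* has this task been switched to the new code yet? */
};

/* Old implementation: knows nothing about shadow page tables. */
static void set_entry_old(struct mm *mm, unsigned idx, uint64_t val)
{
    mm->full[idx] = val;
}

/* New implementation: keeps the shadow copy in sync. */
static void set_entry_new(struct mm *mm, unsigned idx, uint64_t val)
{
    mm->full[idx] = val;
    if (mm->shadow_installed)
        mm->shadow[idx] = val;
}

/* Per-task redirection: each task runs the version it has been migrated to. */
static void task_set_entry(struct task *t, unsigned idx, uint64_t val)
{
    assert(idx < USER_SLOTS);
    if (t->patched)
        set_entry_new(t->mm, idx, val);
    else
        set_entry_old(t->mm, idx, val);
}

/* Copy the user half and start relying on the shadow. */
static void install_shadow(struct mm *mm)
{
    for (unsigned i = 0; i < USER_SLOTS; i++)
        mm->shadow[i] = mm->full[i];
    mm->shadow_installed = true;
}

static bool shadow_in_sync(const struct mm *mm)
{
    for (unsigned i = 0; i < USER_SLOTS; i++)
        if (mm->shadow[i] != mm->full[i])
            return false;
    return true;
}
```

If A (patched) installs the shadow and B (unpatched) then updates an entry, `shadow_in_sync()` reports a mismatch: B's old code never touches the shadow.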

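What is needed here is a way for the live patch module to query the global patch state, that is, to ask whether all tasks have completed the transition to the new implementation. Likewise, the live patch needs to be notified right before a transition back to the unpatched state begins.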
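In code, the desired query and notification amount to something like the following. This is a hypothetical interface sketched for illustration: `all_tasks_patched()`, `maybe_enable_shadows()` and `before_revert()` are not kGraft functions, and the array stands in for the kernel's task list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Per-task state as the patching core would track it (hypothetical). */
struct task_state {
    bool patched;
};

/* The question the live patch wants answered: has every task switched? */
static bool all_tasks_patched(const struct task_state *tasks, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (!tasks[i].patched)
            return false;
    return true;
}

/* Shadow page tables may only be used once the answer is "yes"... */
static bool shadows_enabled;

static void maybe_enable_shadows(const struct task_state *tasks, size_t n)
{
    if (all_tasks_patched(tasks, n))
        shadows_enabled = true;
}

/* ...and must be given up again right before a revert starts. */
static void before_revert(void)
{
    shadows_enabled = false;
}
```

As long as a single task is still unpatched, `maybe_enable_shadows()` leaves the shadows disabled, which avoids the stale-copy scenario above.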

However, the current kGraft implementation provides no such functionality. So we wondered whether it could be emulated somehow, and it turned out that it can: kGraft simply has to patch itself.

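Once the kGraft core has determined that all tasks have transitioned to the "patched" state, it calls the internal housekeeping function kgr_finalize(). In particular, the task in whose context kgr_finalize() is called has itself already transitioned to the new state, so if the live patch provides a replacement for kgr_finalize(), the call is redirected to that replacement. The live patch can therefore modify the implementation of kgr_finalize() to get notified about the global patch state. The same approach works for the code path that initiates the transition from the patched back to the unpatched state.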
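The self-patching trick can be modeled in user space, with a plain branch standing in for kGraft's redirection. `kgr_finalize_orig()`, `kgr_finalize_patched()`, `call_kgr_finalize()`, `core_transition_done()` and the `begin_revert_*()` pair are hypothetical; the real kgr_finalize() lives inside the kGraft core and is redirected by kGraft's own patching machinery.

```c
#include <assert.h>
#include <stdbool.h>

static int housekeeping_runs; /* counts runs of the original routine */
static bool global_patched;   /* the live patch's view of the global state */

/* Stand-in for the original kGraft housekeeping routine. */
static void kgr_finalize_orig(void)
{
    housekeeping_runs++;
}

/* Live patch replacement: record "all tasks transitioned", then do the
 * original work. */
static void kgr_finalize_patched(void)
{
    global_patched = true;
    kgr_finalize_orig();
}

/* Model of per-task redirection: a migrated caller reaches the replacement. */
static void call_kgr_finalize(bool caller_patched)
{
    if (caller_patched)
        kgr_finalize_patched();
    else
        kgr_finalize_orig();
}

/* The core calls finalize only after every task, including the caller,
 * has transitioned, so the replacement is always the one that runs. */
static void core_transition_done(void)
{
    call_kgr_finalize(true);
}

/* Hypothetical hook on the code path that starts a revert. */
static void begin_revert_orig(void)
{
    /* the original revert setup would run here */
}

static void begin_revert_patched(void)
{
    global_patched = false; /* notified just before reverting */
    begin_revert_orig();
}
```

The design relies only on the ordinary per-task redirection: because the caller of kgr_finalize() is guaranteed to be migrated, the flag update needs no extra support from kGraft.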

In conclusion, by patching kGraft itself, a live patch can hook into kGraft's internal transition process and keep track of its own state.

To be continued in "Part 3: Changes required to the Translation Lookaside Buffer (TLB) flush primitives".
