CRI-O Container Runtime on SUSE CaaS Platform 3

Interested in Kubernetes and container runtimes?

Great, here is what we have been working on with the open-source community!

With the release of version 3 of SUSE CaaS Platform, we are proud to announce CRI-O as an opt-in container runtime that we offer as a tech preview to our users. CRI-O is a container runtime that is fully compatible with the Kubernetes CRI (Container Runtime Interface) and the OCI (Open Container Initiative) specifications, and it is a lightweight alternative to traditional container runtimes. Using CRI-O is entirely transparent to Kubernetes end-users, but it brings many non-functional benefits, such as improved security, stability and maintainability, which we cover in this blog post.

CRI-O is developed by maintainers and contributors from various companies, and SUSE is proud to be part of, and contribute to, this community with the ultimate goal of providing users with a first-class, enterprise-grade Kubernetes experience.

In the remainder of this blog post, we give an introduction to the CRI-O container runtime, how it can be used and how it is different from traditional container runtimes. We further explain how a SUSE CaaS Platform cluster can be configured to use CRI-O and show how we can perform common maintenance and container-management tasks on a CRI-O node.

Why CRI-O?

The primary goal of CRI-O has always been stability for Kubernetes. In the past, the stability of Kubernetes suffered from the continuous evolution of more traditional, general-purpose container runtimes. This led to the desire for a container runtime fully dedicated to Kubernetes and, eventually, to the birth of CRI-O. The community achieves this goal by limiting the scope of CRI-O to serving the Kubernetes CRI and nothing else. This greatly reduces the amount of source code, which shrinks the attack surface of CRI-O and thus increases security, while also making it easier to maintain and test. In line with this focus, each code change to CRI-O must pass the entire Kubernetes end-to-end test suite before it is merged into the code repository.

The CRI-O community has learned a great deal from the technological achievements of the Docker open source project and even reuses parts of its source code, for instance, to support different storage drivers such as Btrfs, OverlayFS, devicemapper and AUFS. The big difference between the two lies in the architecture. While the Docker open source engine requires several daemons to be running on the host system, such as the Docker daemon and containerd, CRI-O keeps the number of running processes to a minimum. This difference stems partly from a different design approach and partly from Docker serving a plethora of use cases, while CRI-O is limited to Kubernetes.
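CRI-O obtains this storage-driver support through the containers/storage library. As a hedged illustration of where the driver is chosen, the excerpt below assumes the CRI-O 1.x layout of /etc/crio/crio.conf; key names, paths and defaults may differ between CRI-O releases and on SUSE CaaS Platform 3.

```toml
# Excerpt modeled on /etc/crio/crio.conf (CRI-O 1.x); values are illustrative.
[crio]
# Where image layers and container root filesystems are stored
root = "/var/lib/containers/storage"
# Where runtime state is kept
runroot = "/var/run/containers/storage"
# Storage (graph) driver, e.g. "overlay", "btrfs", "devicemapper" or "aufs"
storage_driver = "overlay"
```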

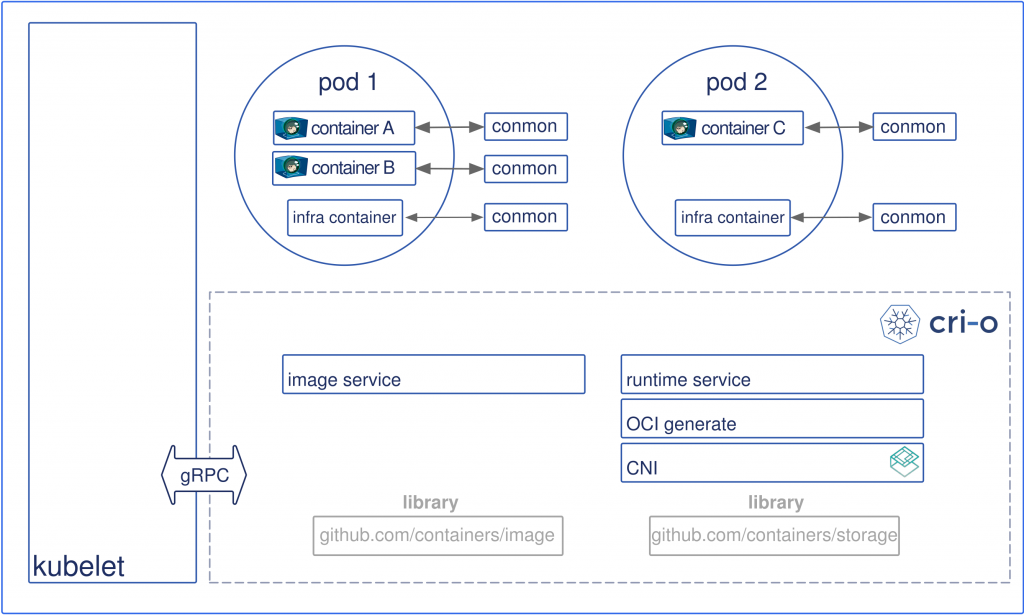

The figure above depicts the architecture of CRI-O, where the kubelet communicates with CRI-O via gRPC remote procedure calls. CRI-O implements the basic building blocks of the Kubernetes Container Runtime Interface, including services to pull and manage OCI-compliant container images, to set up the CNI networking environment and to configure pods and containers. The containers can be executed by any OCI-compatible container runtime, such as runc and Kata Containers, both of which are part of CRI-O’s test matrix. Each container is monitored by a separate conmon process, which handles the container’s logging and records the exit code of the container’s process.
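A hedged sketch of how these building blocks map to configuration: the excerpt below assumes the CRI-O 1.x layout of /etc/crio/crio.conf and shows the gRPC socket the kubelet connects to, the OCI runtime (runc), the conmon monitor and the CNI directories. All paths are assumptions that vary by distribution, and newer CRI-O releases configure runtimes in [crio.runtime.runtimes.*] tables instead of a single runtime key.

```toml
# Excerpt modeled on /etc/crio/crio.conf (CRI-O 1.x); paths are illustrative.
[crio.api]
# Unix socket on which CRI-O serves the CRI gRPC API to the kubelet
listen = "/var/run/crio/crio.sock"

[crio.runtime]
# OCI-compatible runtime that actually executes the containers
runtime = "/usr/bin/runc"
# Monitor process started once per container (logging, exit code)
conmon = "/usr/libexec/crio/conmon"

[crio.network]
# CNI network configuration files and plugin binaries
network_dir = "/etc/cni/net.d/"
plugin_dir = "/opt/cni/bin/"
```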

This architecture makes CRI-O much slimmer than the feature-rich, yet heavyweight, Docker daemon. When using Docker, the kubelet communicates with the dockershim, which translates each CRI request into a Docker API request for the Docker daemon. The Docker daemon in turn communicates with containerd, which finally launches runc, which then starts PID 1 of the container.
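The difference also shows in how the kubelet is pointed at its runtime. As an illustrative fragment, assuming the kubelet flags of the Kubernetes 1.10 era (both the dockershim and the --container-runtime flag were removed in later Kubernetes releases), the two set-ups differ roughly as follows. On SUSE CaaS Platform, the runtime choice made at setup time is expected to take care of this, so it is shown for understanding rather than as something to edit by hand.

```shell
# Docker engine: the kubelet's built-in dockershim translates CRI calls
# into Docker API requests.
#   kubelet --container-runtime=docker
#
# CRI-O: the kubelet sends CRI gRPC calls directly to CRI-O's socket
# (socket path is an assumption; see CRI-O's configuration).
#   kubelet --container-runtime=remote \
#     --container-runtime-endpoint=unix:///var/run/crio/crio.sock
```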

Using CRI-O on SUSE CaaS Platform 3

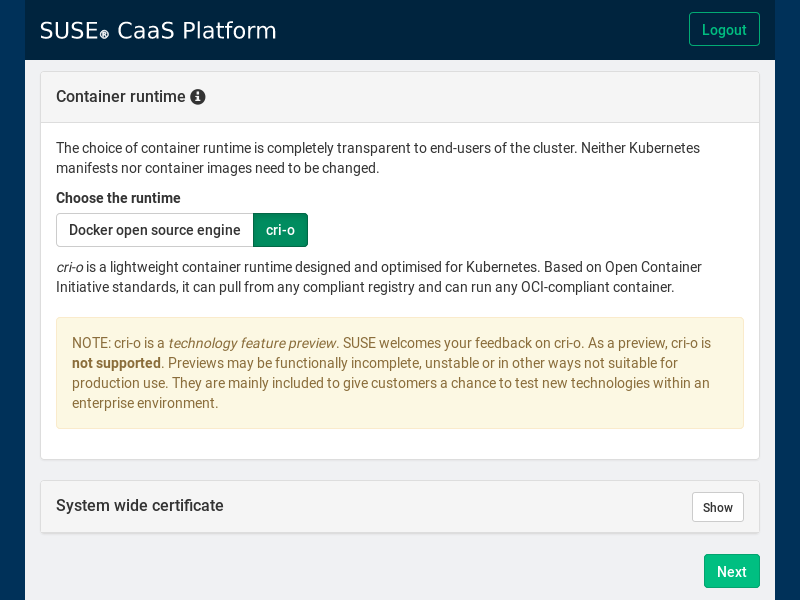

With the release of SUSE CaaS Platform 3, we offer CRI-O as an opt-in container runtime that can be selected in the initial configuration menu, where we can choose between the Docker open source engine and CRI-O.

As depicted in the screenshot above, CRI-O is shipped as a technology preview, while Docker remains the default choice and is fully supported. SUSE wants to give you the chance to test CRI-O in an enterprise environment and to collect your feedback. The current plan is to promote CRI-O to be fully supported with SUSE CaaS Platform 4.

Working with CRI-O

From the perspective of a Kubernetes user, using CRI-O is entirely transparent and no functional difference compared to using the Docker open-source engine can be expected. Neither Kubernetes manifests nor container images need to be changed. But sometimes there are cases where we may need to debug a given pod or container, for instance, when trying to reproduce a failing MySQL database running in a container. Such maintenance tasks are best performed directly on the Kubernetes host.

On a Kubernetes host running the Docker open source engine, we can use the Docker command-line client, for instance, to list currently running containers with docker ps or to execute a command in a container with docker exec. When using CRI-O, however, the Docker command-line client is of no use: it talks to the Docker daemon, which does not know about the containers that CRI-O manages. This is where CRICTL enters the stage. CRICTL is a command-line client that communicates with any runtime endpoint implementing the Kubernetes CRI, which makes it a generic tool to debug and work with Kubernetes nodes, independent of which CRI container runtime is being used. Let’s have a look at how we can use CRICTL to perform some common operations on a Kubernetes host.
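crictl has to know which CRI endpoint to talk to. A minimal sketch, assuming CRI-O listens on its usual socket /var/run/crio/crio.sock (check the listen setting of your installation; the file may already be preconfigured on your nodes): the endpoint can be passed per invocation, as in crictl --runtime-endpoint unix:///var/run/crio/crio.sock ps, or stored in /etc/crictl.yaml.

```yaml
# /etc/crictl.yaml: default endpoints for crictl (illustrative values)
runtime-endpoint: unix:///var/run/crio/crio.sock
image-endpoint: unix:///var/run/crio/crio.sock
```

Pointing these entries at another CRI runtime’s socket is what lets the same crictl binary work with other CRI runtimes as well.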

First, let’s log into a machine running SUSE CaaS Platform. At login, we are greeted with some basic information about the node, including the container runtime (i.e., CRI-O) that we previously configured in the Velum dashboard when setting up the cluster:

    Welcome to SUSE CaaS Platform!
    Machine ID: 331c55b79e7c44c88f1f7615db62a09b
    Hostname: worker-1
    Container Runtime: CRI-O
    The roles of this node are:
      - kube-minion
      - etcd

Now let’s check which containers are currently running on the node via crictl ps:

    $ crictl ps
    CONTAINER ID    IMAGE                    CREATED      STATE               NAME           ATTEMPT
    67cc18ec3e675   6f06a3d36408bc50[...]    4 days ago   CONTAINER_RUNNING   kubedns        0
    5480ff45a71fc   b4968854a7d16084[...]    4 days ago   CONTAINER_RUNNING   kube-flannel   0
    91a1906ce76f2   2cb98d8e45f36556[...]    4 days ago   CONTAINER_RUNNING   haproxy        0

The crictl ps output looks similar to that of docker ps, but it is less verbose, as some information, such as the executed command and mapped ports, is not displayed. If you are looking for such information, you can run crictl inspect on a specific container ID to list all kinds of details about the container, including its state, the container image used, open ports, mount points and much more. To look at the details of a specific image or pod, use crictl inspecti and crictl inspectp, respectively.
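crictl inspect expects a container ID rather than a name. The following is a minimal sketch of a hypothetical helper, container_id_by_name, that looks up the ID for a given container name in crictl ps output. It assumes the column layout shown above, where NAME is the second-to-last column, and would need adjusting for crictl versions that print additional columns.

```shell
#!/bin/sh
# Hypothetical helper (not part of crictl): read `crictl ps` output on stdin
# and print the ID of every container whose NAME column equals $1.
# Assumes NAME is the second-to-last column and ATTEMPT the last one.
container_id_by_name() {
  # NR > 1 skips the header line; awk splits fields on runs of whitespace,
  # so the multi-word CREATED column does not shift the last two columns.
  awk -v name="$1" 'NR > 1 && $(NF - 1) == name { print $1 }'
}

# Example on a CRI-O node (requires crictl and root privileges):
#   id=$(crictl ps | container_id_by_name kubedns)
#   crictl inspect "$id"
# Pods and images are listed with `crictl pods` and `crictl images`;
# their IDs can be passed to `crictl inspectp` and `crictl inspecti`.
```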

Besides basic operations to gather information about running containers, CRICTL offers more powerful operations to manipulate the state of containers and the image storage:

- Pulling an image via crictl pull opensuse/opensuse:tumbleweed
- Executing a command in a container via crictl exec $CONTAINER $COMMAND
- Opening a shell in a container via crictl exec -it $CONTAINER sh
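Minimal container images do not always ship bash. A small sketch of a hypothetical helper, container_shell, that builds on crictl exec -it, preferring bash and falling back to sh. The CRICTL variable is our own addition so that the crictl binary can be swapped out, for example with echo to print the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of crictl): open an interactive shell in
# container $1, using bash if the image has it and sh otherwise.
# CRICTL defaults to `crictl`; override it (e.g. CRICTL=echo) for a dry run.
container_shell() {
  "${CRICTL:-crictl}" exec -it "$1" \
    sh -c 'command -v bash >/dev/null 2>&1 && exec bash || exec sh'
}

# Example on a CRI-O node:
#   container_shell 67cc18ec3e675
```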

CRICTL is a powerful tool to perform various maintenance and container-management tasks on a Kubernetes node and offers a command-line interface similar to that of traditional container clients. However, CRICTL is closely tied to the Kubernetes CRI, which can make running a new container a bit cumbersome. This is why SUSE CaaS Platform also ships Podman, purely for debugging and maintenance purposes.
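To illustrate why: because the CRI models every container as a member of a pod sandbox, crictl first creates a sandbox (crictl runp), then creates a container inside it (crictl create) and finally starts it (crictl start), each step driven by a JSON config. The sketch below follows the config examples in the cri-tools documentation; the function names, the debug-sandbox and debug names and the CRICTL override are hypothetical, and real deployments may need additional fields.

```shell
#!/bin/sh
# Sketch: start a long-running debug container through the CRI with crictl.
# Field names follow the cri-tools documentation examples; the names
# "debug-sandbox" and "debug" are hypothetical. CRICTL defaults to `crictl`.

# Write the pod sandbox and container configs into directory $1.
write_debug_configs() {
  cat > "$1/pod-config.json" <<'EOF'
{
  "metadata": {
    "name": "debug-sandbox",
    "namespace": "default",
    "uid": "debug-sandbox-uid",
    "attempt": 1
  },
  "log_directory": "/tmp",
  "linux": {}
}
EOF
  cat > "$1/container-config.json" <<'EOF'
{
  "metadata": {"name": "debug"},
  "image": {"image": "opensuse/opensuse:tumbleweed"},
  "command": ["sleep", "3600"],
  "log_path": "debug.0.log",
  "linux": {}
}
EOF
}

# Create a pod sandbox, create a container in it, start the container and
# print the container ID. Stops at the first failing step.
run_debug_container() {
  dir=$1
  write_debug_configs "$dir" || return
  pod_id=$("${CRICTL:-crictl}" runp "$dir/pod-config.json") || return
  ctr_id=$("${CRICTL:-crictl}" create "$pod_id" \
    "$dir/container-config.json" "$dir/pod-config.json") || return
  "${CRICTL:-crictl}" start "$ctr_id" >/dev/null || return
  printf '%s\n' "$ctr_id"
}

# Example on a CRI-O node (the image must have been pulled beforehand):
#   cfg=$(mktemp -d) && id=$(run_debug_container "$cfg")
#   crictl exec -it "$id" sh
```

Three commands and two config files for one container is exactly the kind of overhead that a Docker-like client avoids.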

Podman is a command-line client that performs operations similar to those of the Docker client and can be seen as a drop-in replacement for Docker outside the context of Kubernetes, to run, build and manage containers and container images. The command-line interface of Podman is nearly identical to that of Docker, which allows users to switch seamlessly from one to the other. Podman follows a design paradigm similar to that of CRI-O but is entirely daemon-less. Podman and CRI-O share the same image storage, but at the time of writing, they do not yet share containers; the community is currently working on this to enable seamless integration between the two tools. Thanks to the shared image storage, the openSUSE Tumbleweed image we pulled earlier with crictl can be run with Podman via podman run -it opensuse/opensuse:tumbleweed, which gives us a shell in the container that we can use to debug or to perform certain maintenance tasks. If you are interested in reading more about Podman, please refer to another blog post on how to use Podman on openSUSE.
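Because Podman reuses the images already present in CRI-O’s storage, a throwaway debug container can be started from such an image without pulling it again. A minimal sketch of a hypothetical helper, podman_debug_shell: the --rm flag removes the container when the shell exits, so no leftovers accumulate on the node, and the PODMAN variable is our own addition that allows a dry run with PODMAN=echo.

```shell
#!/bin/sh
# Hypothetical helper (not part of Podman): start an interactive, throwaway
# shell from image $1 (default: the openSUSE Tumbleweed image pulled earlier).
# --rm deletes the container on exit. PODMAN defaults to `podman`.
podman_debug_shell() {
  "${PODMAN:-podman}" run -it --rm "${1:-opensuse/opensuse:tumbleweed}" sh
}

# Example on a CRI-O node:
#   podman_debug_shell
```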

Call for Participation

SUSE is proud to offer CRI-O as a technology preview to SUSE CaaS Platform 3 users. When setting up a cluster, CRI-O can be selected as the container runtime. We encourage users to try out CRI-O and to provide feedback on their experience. SUSE will continue and expand its efforts in the community to provide a fully community-driven, open-source container runtime that satisfies SUSE’s requirements for enterprise-grade software and provides a first-class Kubernetes experience.
